FeenoX Software Design Specification

Abstract. This Software Design Specification (SDS) document applies to an imaginary Software Requirements Specification (SRS) document issued by a fictitious agency asking for vendors to offer a free and open source cloud-based computational tool to solve engineering problems. The latter can be seen as a “Request for Quotation” and the former as an offer for the fictitious tender. Each section of this SDS addresses one section of the SRS. The original text from the SRS is shown quoted at the very beginning before the actual SDS content.

Table of contents

- 1 Introduction

- 2 Architecture

- 3 Interfaces

- 4 Quality assurance

- 5 Appendix: Downloading and compiling FeenoX

- 6 Appendix: Rules of Unix

philosophy

- 6.1 Rule of Modularity

- 6.2 Rule of Clarity

- 6.3 Rule of Composition

- 6.4 Rule of Separation

- 6.5 Rule of Simplicity

- 6.6 Rule of Parsimony

- 6.7 Rule of Transparency

- 6.8 Rule of Robustness

- 6.9 Rule of Representation

- 6.10 Rule of Least Surprise

- 6.11 Rule of Silence

- 6.12 Rule of Repair

- 6.13 Rule of Economy

- 6.14 Rule of Generation

- 6.15 Rule of Optimization

- 6.16 Rule of Diversity

- 6.17 Rule of Extensibility

- 7 Appendix: FeenoX history

- 8 Appendix: Downloading & compiling

- 9 Appendix: Inputs for solving LE10 with other FEA programs

- 10 Appendix: Downloading and compiling FeenoX

- 11 Appendix: Rules of Unix

philosophy

- 11.1 Rule of Modularity

- 11.2 Rule of Clarity

- 11.3 Rule of Composition

- 11.4 Rule of Separation

- 11.5 Rule of Simplicity

- 11.6 Rule of Parsimony

- 11.7 Rule of Transparency

- 11.8 Rule of Robustness

- 11.9 Rule of Representation

- 11.10 Rule of Least Surprise

- 11.11 Rule of Silence

- 11.12 Rule of Repair

- 11.13 Rule of Economy

- 11.14 Rule of Generation

- 11.15 Rule of Optimization

- 11.16 Rule of Diversity

- 11.17 Rule of Extensibility

- 12 Appendix: FeenoX history

- 13 Appendix: Downloading &

compiling

- 13.1 Debian/Ubuntu install

- 13.2 Downloads

- 13.3 Licensing

- 13.4 Quickstart

- 13.5 Detailed configuration and compilation

- 13.6 Advanced settings

- 14 Appendix: Inputs for solving LE10 with other FEA programs

1 Introduction

A computational tool (herein after referred to as the tool) specifically designed to be executed in arbitrarily-scalable remote servers (i.e. in the cloud) is required in order to solve engineering problems following the current state-of-the-art methods and technologies impacting the high-performance computing world. This (imaginary but plausible) Software Requirements Specification document describes the mandatory features this tool ought to have and lists some features which would be nice the tool had. Also it contains requirements and guidelines about architecture, execution and interfaces in order to fulfill the needs of cognizant engineers as of the 2020s.

On the one hand, the tool should allow to solve industrial problems under stringent efficiency (sec. 2.3) and quality (sec. 4) requirements. It is therefore mandatory to be able to assess the source code for

- independent verification, and/or

- performance profiling, and/or

- quality control

by qualified third parties from all around the world. Hence, it has to be open source according to the definition of the Open Source Initiative.

On the other hand, the initial version of the tool is expected to provide a basic functionality which might be extended (sec. 1.1 and sec. 2.6) by academic researchers and/or professional programmers. It thus should also be free—in the sense of freedom, not in the sense of price—as defined by the Free Software Foundation. There is no requirement on the pricing scheme, which is up to the vendor to define in the offer along with the detailed licensing terms. These should allow users to solve their problems the way they need and, eventually, to modify and improve the tool to suit their needs. If they cannot program themselves, they should have the freedom to hire somebody to do it for them.

FeenoX is a cloud-first computational tool aimed at solving engineering problems with a particular design basis, as explained in

- Theler, J. (2024). FeenoX: a cloud-first finite-element(ish) computational engineering tool. Journal of Open Source Software, 9(95), 5846. https://doi.org/10.21105/joss.05846

“Cloud first” vs. “cloud friendly”

In web design theory, there is a difference between mobile-first and mobile-friendly interfaces. In the same sense, FeenoX is cloud first and not just cloud friendly.

But what does this mean? Let us first start with the concept of “cloud friendliness,” meaning that it is possible to run something on the cloud without substantial effort. This implies that a computational tool is cloud friendly if it

- can be executed remotely without any special care, i.e. a GNU/Linux binary ran on a server through SSH,

- can exploit the (in principle) unbounded resources provided by a set of networked servers, and

- does not need interactive input meaning that, once launched, it can finish without needing further human intervention.

Yet, a cloud-first tool needs to take account other more profound concepts as well in early-stage design decisions. In software development, the modification of an existing desktop-based piece of software to allow remote execution is called “cloud-enabling.” In words of a senior manager, “cloud development is the opposite of desktop development.” So starting from scratch a cloud-first tool is a far better approach than refactoring an existing desktop program to make it cloud friendly.

For instance, to make proper use of the computational resources available in remote servers launched on demand, it is needed to

- have all the hosts in a particular network

- configure a proper domain name service

- design shared network file systems

- etc.

Instead of having to manually perform this set up each time a calculation is needed, one can automate the workflow with ad-hoc scripts acting as “thin clients” which would, for instance,

- launch and configure the remote computing instances, optionally using containerization technology

- send the input files needed by the computational tools

- launch the actual computational tools (Gmsh, FeenoX, etc.) over the

instances, e.g. using

mpiexecor similar to be able to either- to reduce the wall time needed to solve a problem, and/or

- to allow the solution of large problems that do not fit into a single computer

- monitor and communicate with the solver as the calculation progresses

- handle eventual errors

- get back and process the results

Furthermore, we could design and implement more complex clients able to handle things like

- authentication

- resource management (i.e. CPU hours)

- estimation of the number and type of instances needed to solve a certain problem

- parametric sweeps

- optimization loops

- conditionally-chained simulations

- etc.

Therefore, the computational tools that would perform the actual calculations should be designed in such a way not only to allow these kind of workflows but also to make them efficient. In fact, we say “clients” in plural because—as the Unix rule of diversity (sec. 11.16) asks for–depending on the particular problem type and requirements different clients might be needed. And since FeenoX itself is flexible enough to be able to solve not only different types of partial differential equations but also

- different types of problems

- coupled

- parametric

- optimization

- etc.

- in different environments

- many small cases

- a few big ones

- only one but huge

- etc.

- under different conditions

- in the industry by a single engineer

- in the academy by several researchers

- as a service in a public platform

- etc.

then it is expected nor to exist a one-size-fits-all solution able to handle all the combinations in an optimum way.

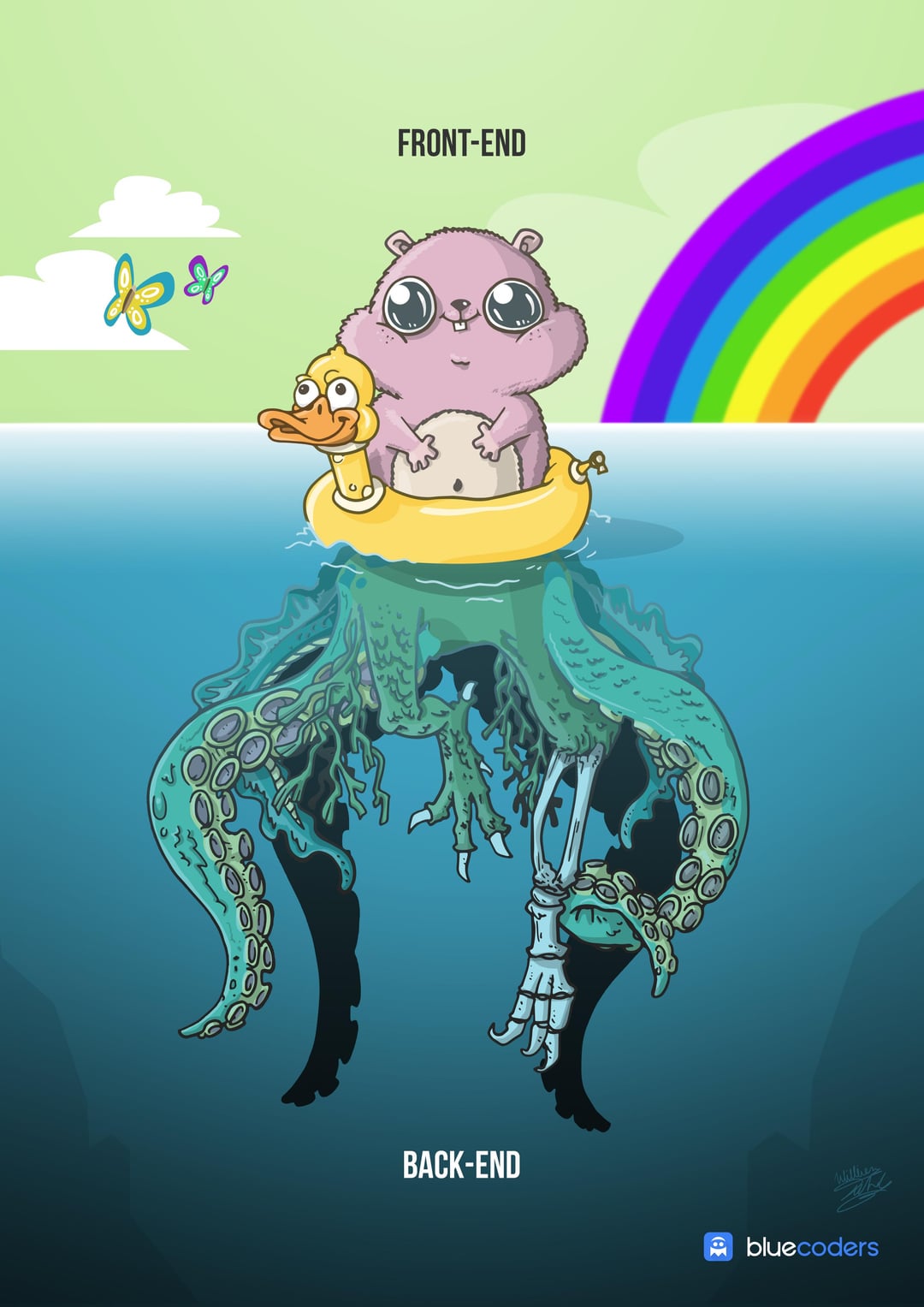

However, if the underlying computational tool has been carefully designed to be able to handle all these details and to be flexible enough to accommodate other eventual and/or unexpected requirements by design, then we say that the tool is “cloud first.” Throughout this SDS we thoroughly explain the features of this particular cloud-first design. Indeed, FeenoX is essentially a back end which can work with a number of different front ends (fig. 1), including these thin clients and web-based interfaces (fig. 2)

Unfair advantage

To better illustrate FeenoX’s unfair advantage (in the entrepreneurial sense), let us first consider what the options are when we need to write a technical report, paper or document:

| Feature | Microsoft Word | Google Docs | Markdown1 | (La)TeX |

|---|---|---|---|---|

| Aesthetics | ❌ | ❌ | ✅ | ✅ |

| Convertibility (to other formats) | 😐 | 😐 | ✅ | 😐 |

| Traceability | ❌ | 😐 | ✅ | ✅ |

| Mobile-friendliness | ❌ | ✅ | ✅ | ❌ |

| Collaborativeness | ❌ | ✅ | ✅ | 😐 |

| Licensing/openness | ❌ | ❌ | ✅ | ✅ |

| Non-nerd friendliness | ✅ | ✅ | 😐 | ❌ |

After analyzing the pros and cons of each alternative, at some point it should be evident that Markdown (plus friends) gives the best trade off. We can then perform a similar analysis for the options available in order to solve an engineering problem casted as a partial differential equation, say by using a finite-element formulation:

| Feature | Desktop GUIs | Web frontends | FeenoX2 | Libraries |

|---|---|---|---|---|

| Flexibility | ❌/😐 | ❌/😐 | ✅ | ✅ |

| Scalability | ❌ | 😐 | ✅ | ✅ |

| Traceability | ❌ | 😐 | ✅ | ✅ |

| Cloud-friendliness | ❌ | ✅ | ✅ | ✅ |

| Collaborativeness | ❌ | ✅ | ✅ | 😐 |

| Licensing/openness | ✅/😐/❌ | ❌ | ✅ | ✅ |

| Non-nerd friendliness | ✅ | ✅ | 😐 | ❌ |

Therefore, FeenoX is—in a certain sense—to desktop FEA programs like

- Code_Aster with Salome-Meca, or

- CalculiX with PrePoMax

and to libraries like

what Markdown is to Word and (La)TeX, respectively and deliberately.

Licensing

FeenoX is licensed under the terms of the GNU General Public License version 3 or, at the user convenience, any later version. This means that users get the four essential freedoms:3

- The freedom to run the program as they wish, for any purpose.

- The freedom to study how the program works, and change it so it does their computing as they wish.

- The freedom to redistribute copies so they can help others.

- The freedom to distribute copies of their modified versions to others.

So a free program has to be open source, but it also has to

explicitly provide the four freedoms above both through the written

license and through appropriate mechanisms to get, modify, compile, run

and document these modifications using well-established and/or

reasonable straightforward procedures. That is why licensing FeenoX as

GPLv3+ also implies that the source code and all the scripts and

makefiles needed to compile and run it are available for anyone that

requires it (i.e. it is compiled with

./configure && make). Anyone wanting to modify the

program either to fix bugs, improve it or add new features is free to do

so. And if they do not know how to program, the have the freedom to hire

a programmer to do it without needing to ask permission to the original

authors. Even more, the

documentation is released under the terms of the Creative Commons

Attribution-ShareAlike 4.0 International License so these new (or

modified) features can be properly documented as well.

Nevertheless, since these original authors are the copyright holders, they still can use it to either enforce or prevent further actions from the users that receive FeenoX under the GPLv3+. In particular, the license allows re-distribution of modified versions only if

- they are clearly marked as different from the original, and

- they are distributed under the same terms of the GPLv3+.

There are also some other subtle technicalities that need not be discussed here such as

- what constitutes a modified version (which cannot be redistributed under a different license)

- what is an aggregate (in which each part be distributed under different licenses)

- usage over a network and the possibility of using AGPL instead of GPL to further enforce freedom

These issues are already taken into account in the FeenoX licensing scheme.

It should be noted that not only is FeenoX free and open source, but also all of the libraries it depends on (and their dependencies) also are. It can also be compiled using free and open source build tool chains running over free and open source operating systems.

To sum up this introduction, FeenoX is…

- a cloud-first computational tool (not just cloud friendly, but cloud first).

- to traditional computational software and to specialized libraries what Markdown is to Word and TeX, respectively.

- both free (as in freedom) and open source.

1.1 Objective

The main objective of the tool is to be able to solve engineering problems which are usually casted as differential-algebraic equations (DAEs) or partial differential equations (PDEs), such as

- heat conduction

- mechanical elasticity

- structural modal analysis

- mechanical frequency studies

- electromagnetism

- chemical diffusion

- process control dynamics

- computational fluid dynamics

- …

on one or more mainstream cloud servers, i.e. computers with hardware and operating systems (further discussed in sec. 2) that allows them to be available online and accessed remotely either interactively or automatically by other computers as well. Other architectures such as high-end desktop personal computers or even low-end laptops might be supported but they should not the main target (i.e. the tool has to be cloud-first but laptop-friendly).

The initial version of the tool must be able to handle a subset of the above list of problem types. Afterward, the set of supported problem types, models, equations and features of the tool should grow to include other models as well, as required in sec. 2.6.

The choice of the initial supported features is based on the types of problem that the FeenoX’s precursor codes (namely wasora, Fino and milonga, referred to as “previous versions” from now on) already have been supporting since more than ten years now. A subsequent road map and release plans can be designed as requested. FeenoX’s first version includes a subset of the required functionality, namely

- open and closed-loop dynamical systems

- Laplace/Poisson/Helmholtz equations

- heat conduction

- mechanical elasticity

- structural modal analysis

- multi-group neutron transport and diffusion

Sec. 2.6 explains the mechanisms that FeenoX provides in order to add (or even remove) other types of problems to be solved.

Recalling that FeenoX is a “cloud-first” tool as explained in sec. 1, it is designed to be developed and executed primarily on GNU/Linux hosts, which is the architecture of more than 90% of the internet servers which we collectively call “the public cloud.” It should be noted that GNU/Linux is a POSIX-compliant operating system which is compatible with Unix, and that FeenoX was designed and implemented following the rules of Unix philosophy which is further explained in sec. 11. Besides the POSIX standard, as explained below in sec. 2.4, FeenoX also uses MPI which is a well-known industry standard for massive execution of parallel processes following the distributed-systems parallelization paradigm. Finally, if performance and/or scalability are not important issues, FeenoX can be run in a (properly cooled) local PC, laptop or even in embedded systems such as Raspberry Pi (see sec. 2).

1.2 Scope

The tool should allow users to define the problem to be solved programmatically. That is to say, the problem should be completely defined using one or more files either…

- specifically formatted for the tool to read such as JSON or a particular input format (historically called input decks in punched-card days), and/or

- written in an high-level interpreted language such as Python or Julia.

Once the problem has been defined and passed on to the solver, no further human intervention should be required.

It should be noted that a graphical user interface is not required. The tool may include one, but it should be able to run without needing any interactive user intervention rather than the preparation of a set of input files. Nevertheless, the tool might allow a GUI to be used. For example, for a basic usage involving simple cases, a user interface engine should be able to create these problem-definition files in order to give access to less advanced users to the tool using a desktop, mobile and/or web-based interface in order to run the actual tool without needing to manually prepare the actual input files.

However, for general usage, users should be able to completely define the problem (or set of problems, i.e. a parametric study) they want to solve in one or more input files and to obtain one or more output files containing the desired results, either a set of scalar outputs (such as maximum stresses or mean temperatures), and/or a detailed time and/or spatial distribution. If needed, a discretization of the domain may to be taken as a known input, i.e. the tool is not required to create the mesh as long as a suitable mesher can be employed using a similar workflow as the one specified in this SRS.

The tool should define and document (sec. 4.4) the way the input files for a solving particular problem are to be prepared (sec. 3.1) and how the results are to be written (sec. 3.2). Any GUI, pre-processor, post-processor or other related graphical tool used to provide a graphical interface for the user should integrate in the workflow described in the preceding paragraph: a pre-processor should create the input files needed for the tool and a post-processor should read the output files created by the tool.

Since FeenoX is designed to be executed in the cloud, it works very much like a transfer function between one (or more) files and zero or more output files:

+------------+

mesh (*.msh) } | | { terminal

data (*.dat) } input ----> | FeenoX |----> output { data files

input (*.fee) } | | { post (vtk/msh)

+------------+Technically speaking, FeenoX can be seen as a Unix filter designed to read an ASCII-based stream of characters (i.e. the input file, which in turn can include other input files or contain instructions to read data from mesh and/or other data files) and to write ASCII-formatted data into the standard output and/or other files. The input file can be prepared either by a human or by another program. The output stream and/or files can be read by either a human and/or another programs. A quotation from Eric Raymond’s The Art of Unix Programming helps to illustrate this idea:

Doug McIlroy, the inventor of Unix pipes and one of the founders of the Unix tradition, had this to say at the time:

Make each program do one thing well. To do a new job, build afresh rather than complicate old programs by adding new features.

Expect the output of every program to become the input to another, as yet unknown, program. Don’t clutter output with extraneous information. Avoid stringently columnar or binary input formats. Don’t insist on interactive input.

[…]

He later summarized it this way (quoted in “A Quarter Century of Unix” in 1994):

- This is the Unix philosophy: Write programs that do one thing and do it well. Write programs to work together. Write programs to handle text streams, because that is a universal interface.

Keep in mind that even though both the quotes above and many finite-element programs that are still mainstream today date both from the early 1970s, fifty years later the latter still

- do not make just only one thing well,

- do complicate old programs by adding new features,

- do not expect their output to become the input to another,

- do clutter output with extraneous information,

- do use stringently columnar and/or binary input (and output!) formats, and/or

- do insist on interactive input.

There are other FEA tools that, even though born closer in time, also follow the above bullets literally. But FeenoX does not, since it follows the Unix philosophy in general and Eric Raymond’s 17 Unix Rules (sec. 11) in particular. One of the main ideas is the rule of separation (sec. 11.4) that essentially asks to separate mechanism from policy, that in the computational engineering world translates into separating the front end from the back end as illustrated in fig. 1.

When solving ordinary differential equations, the usual workflow involves solving them with FeenoX and plotting the results with Gnuplot or Pyxplot. When solving partial differential equations (PDEs), the mesh is created with Gmsh and the output can be post-processed with Gmsh, Paraview or any other post-processing system (even a web-based interface) that follows rule of separation. Even though most FEA programs eventually separate the interface from the solver up to some degree, there are cases in which they are still dependent such that changing the former needs updating the latter. This is the usual case with legacy programs designed back in the 1990s (or even one or two decades before) that are still around nowadays. They usually still fulfill almost all of the bullets above and are the ones which their owners are trying to convert from desktop to cloud-enabled programs instead of starting from scratch.

From the very beginning, FeenoX is designed as a pure back end which should nevertheless provide appropriate mechanisms for different front ends to be able to communicate and to provide a friendly interface for the final user. Yet, the separation is complete in the sense that the nature of the front ends can radically change (say from a desktop-based point-and-click program to a web-based interface or an immersive augmented-reality application with goggles) without needing the modify the back end. Not only far more flexibility is given by following this path, but also develop efficiency and quality is encouraged since programmers working on the lower-level of an engineering tool usually do not have the skills needed to write good user-experience interfaces, and conversely.

In the very same sense, FeenoX does not discretize continuous domains for PDE problems itself, but relies on separate tools for this end. Fortunately, there already exists one meshing tool which is FOSS (GPLv2) and shares most (if not all) of the design basis principles with FeenoX: the three-dimensional finite element mesh generator Gmsh.

Strictly speaking, FeenoX does not need to be used along with Gmsh

but with any other mesher able to write meshes in Gmsh’s format

.msh. But since Gmsh also

- is free and open source,

- works also in a transfer-function-like fashion,

- runs natively on GNU/Linux,

- has a similar (but more comprehensive) API for Python/Julia,

- etc.

it is a perfect match for FeenoX. Even more, it provides suitable domain decomposition methods (through other open-source third-party libraries such as Metis) for scaling up large problems.

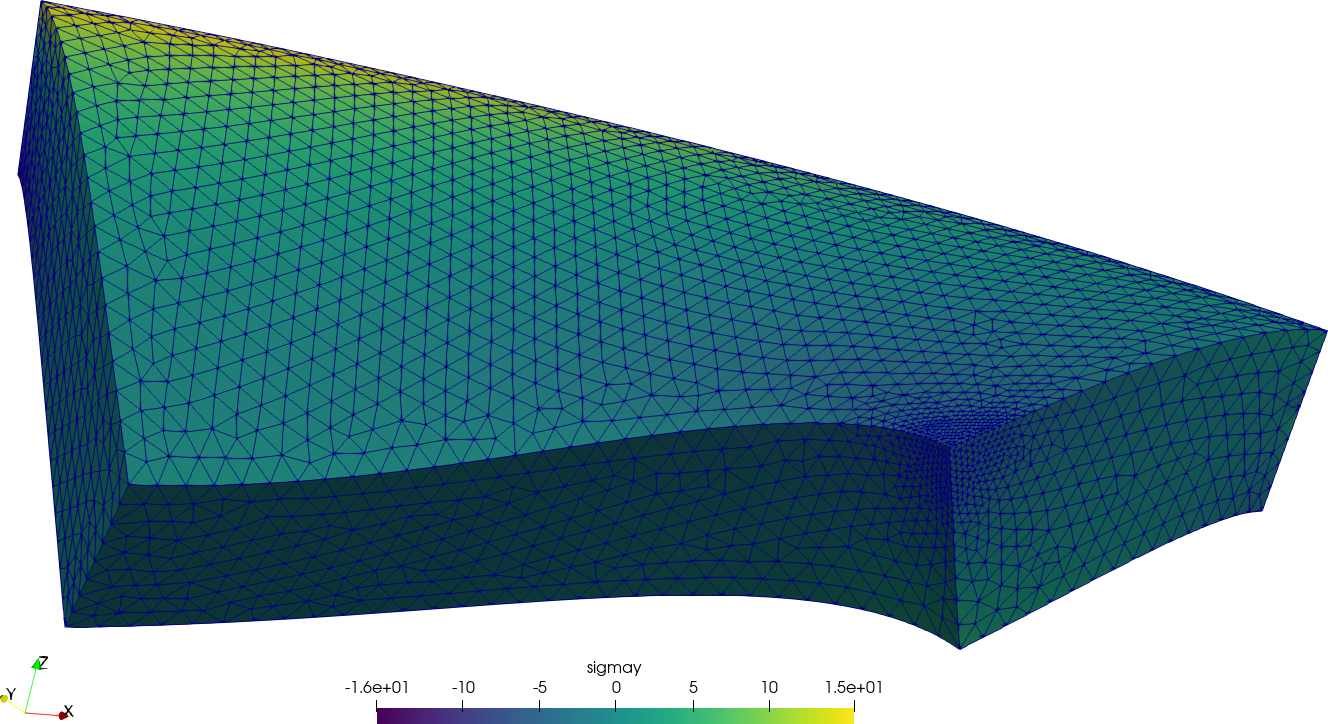

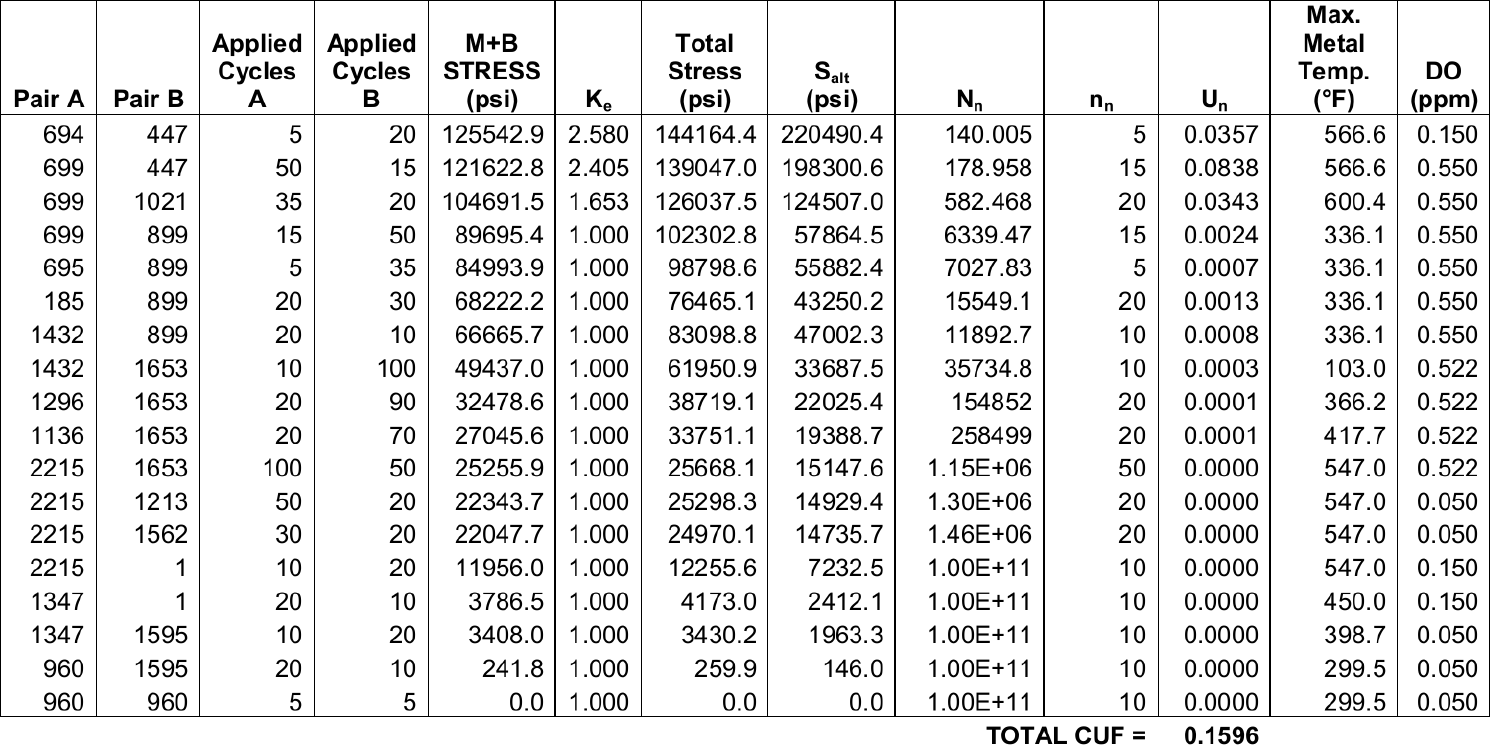

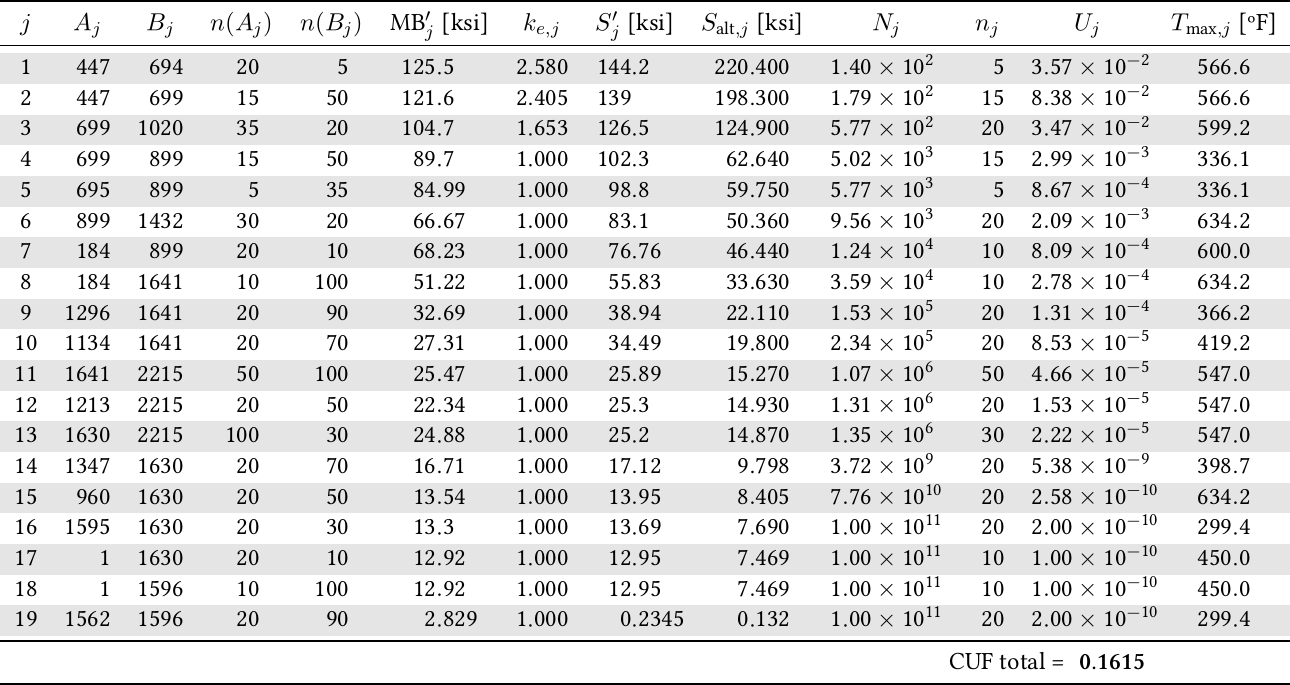

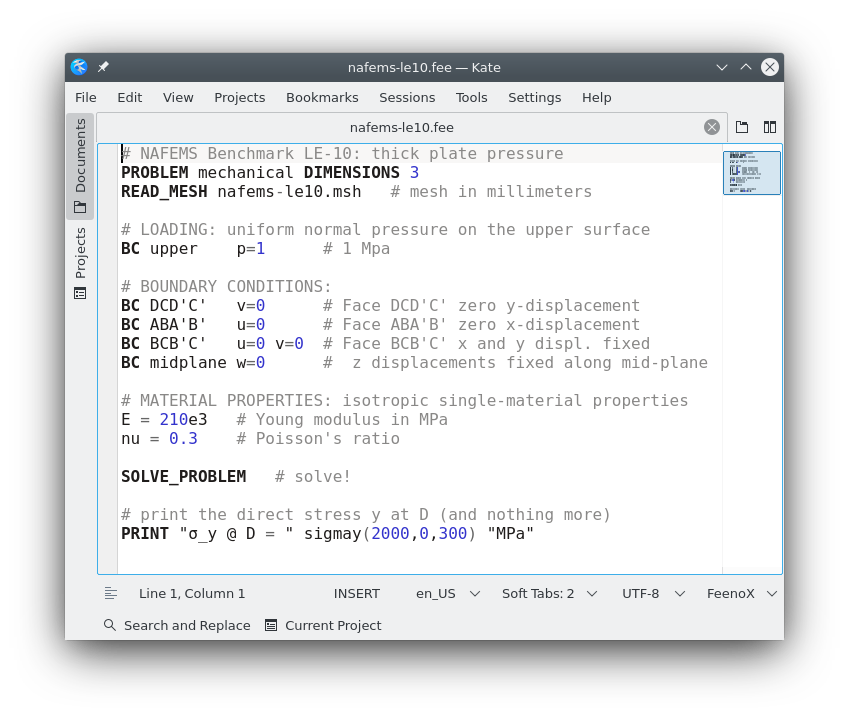

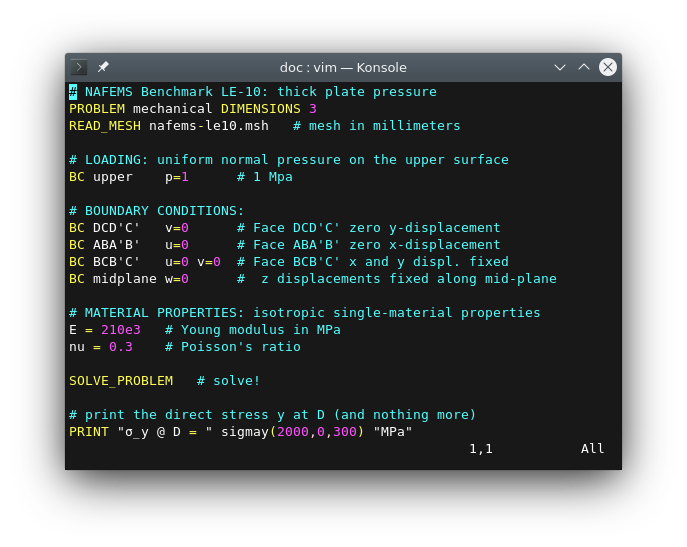

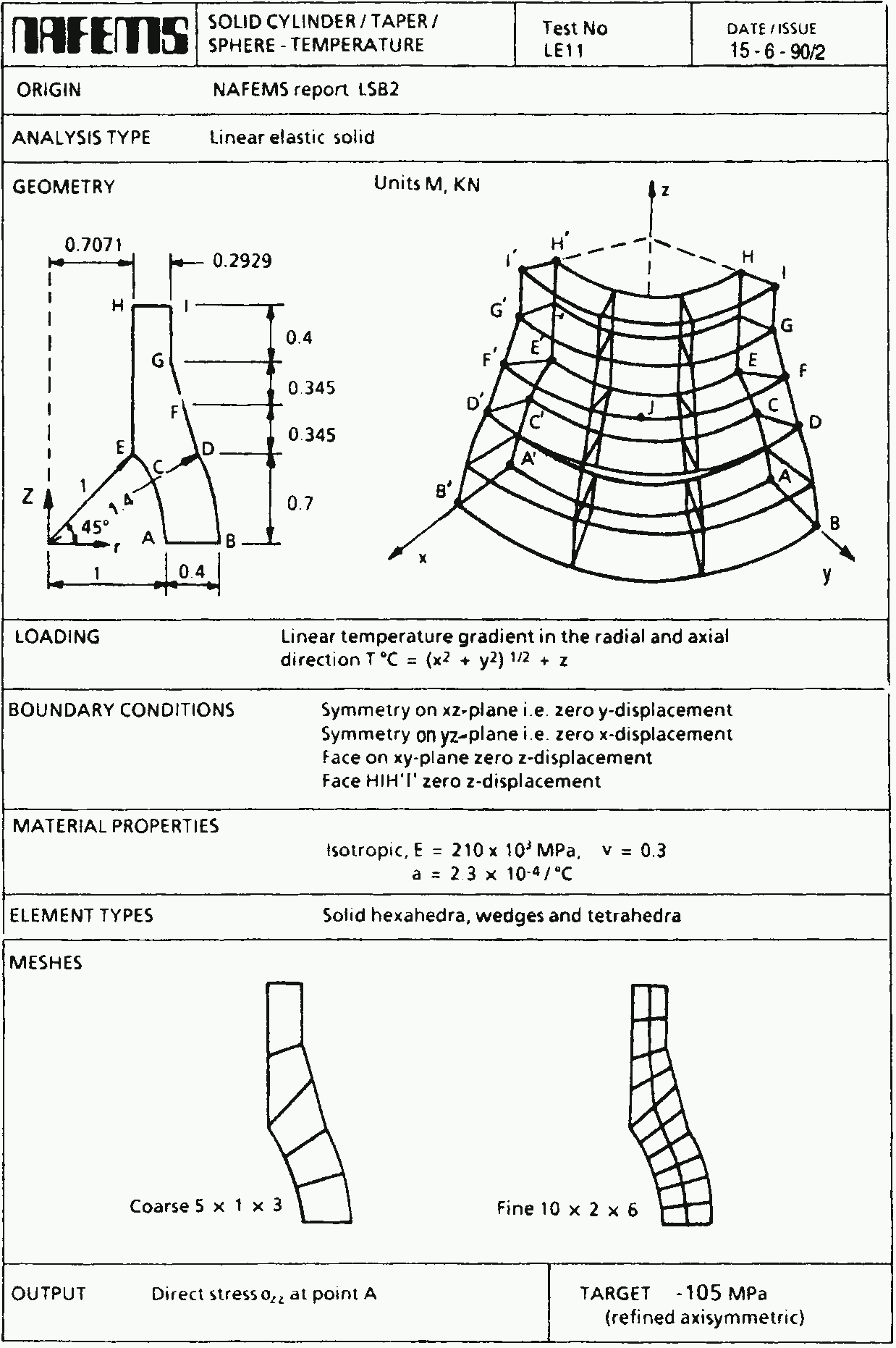

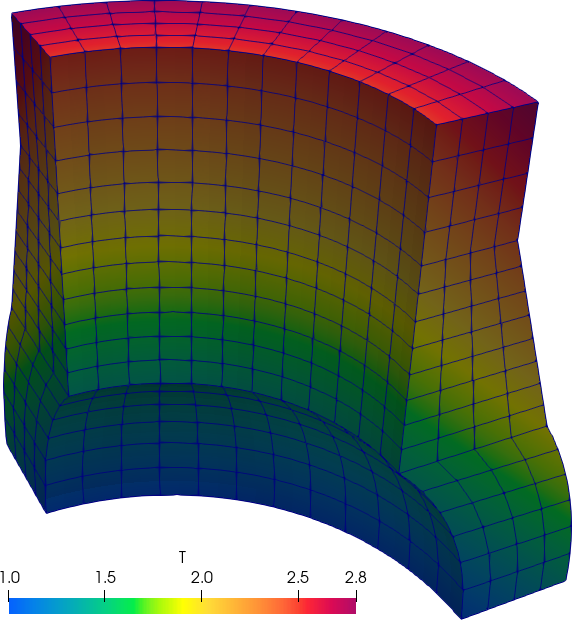

1.2.1 NAFEMS LE10 benchmark

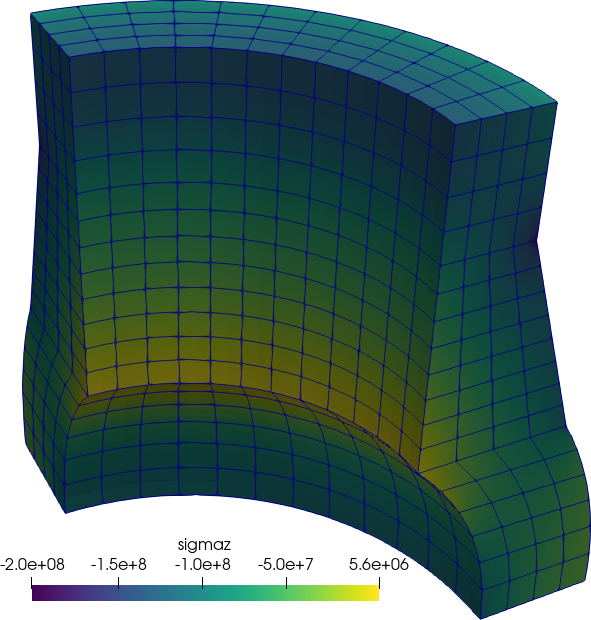

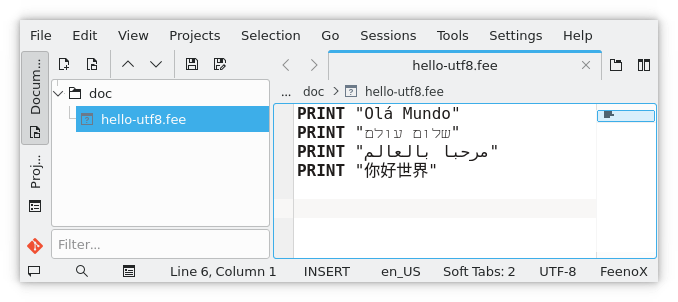

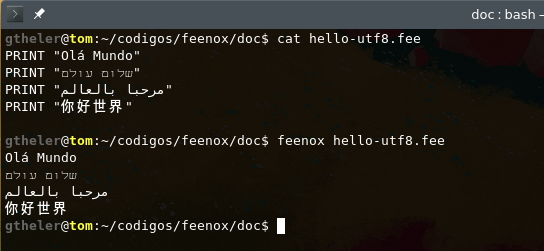

Let us solve the linear elasticity benchmark problem NAFEMS LE10 “Thick plate pressure.” with FeenoX. Note the one-to-one correspondence between the human-friendly problem statement from fig. 3 and the FeenoX input file:

# NAFEMS Benchmark LE-10: thick plate pressure

PROBLEM mechanical MESH nafems-le10.msh # mesh in millimeters

# LOADING: uniform normal pressure on the upper surface

BC upper p=1 # 1 Mpa

# BOUNDARY CONDITIONS:

BC DCD'C' v=0 # Face DCD'C' zero y-displacement

BC ABA'B' u=0 # Face ABA'B' zero x-displacement

BC BCB'C' u=0 v=0 # Face BCB'C' x and y displ. fixed

BC midplane w=0 # z displacements fixed along mid-plane

# MATERIAL PROPERTIES: isotropic single-material properties

E = 210 * 1e3 # Young modulus in MPa

nu = 0.3 # Poisson's ratio

# print the direct stress y at D (and nothing more)

PRINTF "σ_y @ D = %.4f MPa" sigmay(2000,0,300)Here, “one-to-one” means that the input file does not need any extra definition which is not part of the problem formulation. Of course the cognizant engineer can give further definitions such as

- the linear solver and pre-conditioner

- the tolerances for iterative solvers

- options for computing stresses out of displacements

- etc.

However, she is not obliged to as–at least for simple problems—the defaults are reasonable. This is akin to writing a text in Markdown where one does not need to care if the page is A4 or letter (as, in most cases, the output will not be printed but rendered in a web browser).

The problem asks for the normal stress in the y direction \sigma_y at point “D,” which is what FeenoX writes (and nothing else, rule of economy):

$ feenox nafems-le10.fee

sigma_y @ D = -5.38016 MPa

$ Also note that since there is only one material, there is no need to do an explicit link between material properties and physical volumes in the mesh (rule of simplicity). And since the properties are uniform and isotropic, a single global scalar for E and a global single scalar for \nu are enough.

For the sake of visual completeness, post-processing data with the scalar distribution of \sigma_y and the vector field of displacements [u,v,w] can be created by adding one line to the input file:

WRITE_MESH nafems-le10.vtk sigmay VECTOR u v wThis VTK file can then be post-processed to create interactive 3D views, still screenshots, browser and mobile-friendly webGL models, etc. In particular, using Paraview one can get a colorful bitmapped PNG (the displacements are far more interesting than the stresses in this problem).

1.2.2 The Lorenz chaotic system

Let us consider the famous chaotic Lorenz’s dynamical system. Here is one way of getting an image of the butterfly-shaped attractor using FeenoX to compute it and Gnuplot to draw it. Solve

\begin{cases} \dot{x} &= \sigma \cdot (y - x) \\ \dot{y} &= x \cdot (r - z) - y \\ \dot{z} &= x y - b z \\ \end{cases}

for 0 < t < 40 with initial conditions

\begin{cases} x(0) = -11 \\ y(0) = -16 \\ z(0) = 22.5 \\ \end{cases}

and \sigma=10, r=28 and b=8/3, which are the classical parameters that generate the butterfly as presented by Edward Lorenz back in his seminal 1963 paper Deterministic non-periodic flow.

The following ASCII input file resembles the parameters, initial conditions and differential equations of the problem as naturally as possible:

PHASE_SPACE x y z # Lorenz attractor’s phase space is x-y-z

end_time = 40 # we go from t=0 to 40 non-dimensional units

sigma = 10 # the original parameters from the 1963 paper

r = 28

b = 8/3

x_0 = -11 # initial conditions

y_0 = -16

z_0 = 22.5

# the dynamical system's equations written as naturally as possible

x_dot = sigma*(y - x)

y_dot = x*(r - z) - y

z_dot = x*y - b*z

PRINT t x y z # four-column plain-ASCII outputIndeed, when executing FeenoX with this input file, we get four ASCII columns (t, x, y and z) which we can then redirect to a file and plot it with a standard tool such as Gnuplot. Note the importance of relying on plain ASCII text formats both for input and output, as recommended by the Unix philosophy and the rule of composition: other programs can easily create inputs for FeenoX and other programs can easily understand FeenoX’s outputs. This is essentially how Unix filters and pipes work.

Note the one-to-one correspondence between the human-friendly differential equations (written in TeX and rendered as typesetted mathematical symbols) and the computer-friendly input file that FeenoX reads.

Even though the initial version of FeenoX does not provide an API for high-level interpreted languages such as Python or Julia, the code is written in such a way that this feature can be added without needing a major refactoring. This will allow to fully define a problem in a procedural way, increasing also flexibility.

2 Architecture

The tool must be aimed at being executed unattended on remote servers which are expected to have a mainstream (as of the 2020s) architecture regarding operating system (GNU/Linux variants and other Unix-like OSes) and hardware stack, such as

- a few Intel-compatible or ARM-like CPUs per host

- a few levels of memory caches

- a few gigabytes of random-access memory

- several gigabytes of solid-state storage

It should successfully run on

- bare-metal

- virtual servers

- containerized images

using standard compilers, dependencies and libraries already available in the repositories of most current operating systems distributions.

Preference should be given to open source compilers, dependencies and libraries. Small problems might be executed in a single host but large problems ought to be split through several server instances depending on the processing and memory requirements. The computational implementation should adhere to open and well-established parallelization standards.

Ability to run on local desktop personal computers and/laptops is not required but suggested as a mean of giving the opportunity to users to test and debug small coarse computational models before launching the large computation on a HPC cluster or on a set of scalable cloud instances. Support for non-GNU/Linux operating systems is not required but also suggested.

Mobile platforms such as tablets and phones are not suitable to run engineering simulations due to their lack of proper electronic cooling mechanisms. They are suggested to be used to control one (or more) instances of the tool running on the cloud, and even to pre and post process results through mobile and/or web interfaces.

Very much like the C language (after A & B) and Unix itself (after a first attempt and the failed MULTICS), FeenoX can be seen as a third-system effect:

A notorious ‘second-system effect’ often afflicts the successors of small experimental prototypes. The urge to add everything that was left out the first time around all too frequently leads to huge and overcomplicated design. Less well known, because less common, is the ‘third-system effect’: sometimes, after the second system has collapsed of its own weight, there is a chance to go back to simplicity and get it right.

Feenox is indeed the third version written from scratch after a first implementation in 2009 (different small components with different names) and a second one (named wasora that allowed dynamically-shared plugins to be linked at runtime to provide particular PDEs) which was far more complex and had far more features circa 2012–2015. The third attempt, FeenoX, explicitly addresses the “do one thing well” idea from Unix.

Furthermore, not only is FeenoX itself both free and open-source software but, following the rule of composition (sec. 11.3), it also is designed to connect and to work with other free and open source software such as

- Gmsh for pre and/or post-processing

- ParaView for post-processing

- Gnuplot for plotting 1D/2D results

- Pyxplot for plotting 1D results

- Pandoc for creating tables and documents

- TeX for creating tables and documents

and many others, which are readily available in all major GNU/Linux distributions.

FeenoX also makes use of high-quality free and open source mathematical libraries which contain numerical methods designed by mathematicians and implemented by professional programmers. In particular, it depends on

- GNU Scientific Library for general mathematics,

- SUNDIALS IDA for ODEs and DAEs,

- PETSc for linear, non-linear and transient PDEs, and

- SLEPc for PDEs involving eigen problems

Therefore, if one zooms in into the block of the transfer function above, FeenoX can also be seen as a glue layer between the input files defining a physical problem and the mathematical libraries used to solve the discretized equations. For example, when solving the linear elastic problem from the NAFEMS LE10 case discussed above, we can draw the following diagram:

This way, FeenoX bounds its scope to do only one thing and to do it well: to build and solve finite-element formulations of physical problems. And it does so on high grounds, both ethical and technological:

Ethical, since it is free software, all users can

- run,

- share,

- modify, and/or

- re-share their modifications.

If a user cannot read or write code to make FeenoX suit her needs, at least she has the freedom to hire someone to do it for her.

Technological, since it is open source, advanced users can detect and correct bugs and even improve the algorithms. Given enough eyeballs, all bugs are shallow.

FeenoX’s main development architecture is Debian GNU/Linux running over 64-bits Intel-compatible processors (but binaries for ARM architectures can be compiled as well). All the dependencies are free and/or open source and already available in Debian’s latest stable official repositories, as explained in sec. 2.1.

The POSIX standard is followed whenever possible, allowing thus

FeenoX to be compiled in other operating systems and architectures such

as Windows (using Cygwin) and

MacOS. The build procedure is the well-known and mature

./configure && make command.

FeenoX is written in C conforming to the ISO C99 specification (plus POSIX extensions), which is a standard, mature and widely supported language with compilers for a wide variety of architectures. As listed above, for its basic mathematical capabilities, FeenoX uses the GNU Scientific Library. For solving ODEs/DAEs, FeenoX relies on Lawrence Livermore’s SUNDIALS library. For PDEs, FeenoX uses Argonne’s PETSc library and Universitat Politècnica de València’s SLEPc library. All of them are

- free and open source,

- written in C (neither Fortran nor C++),

- mature and stable,

- actively developed and updated,

- very well known both in the industry and academia.

Moreover, PETSc and SLEPc are scalable through the MPI standard, further discussed in sec. 2.4. This means that programs using both these libraries can run on either large high-performance supercomputers or low-end laptops. FeenoX has been run on

- Raspberry Pi

- Laptop (GNU/Linux & Windows 10)

- Macbook

- Desktop PC

- Bare-metal servers

- Vagrant/Virtualbox virtual machines

- Docker/Kubernetes containers

- AWS/DigitalOcean/Contabo instances

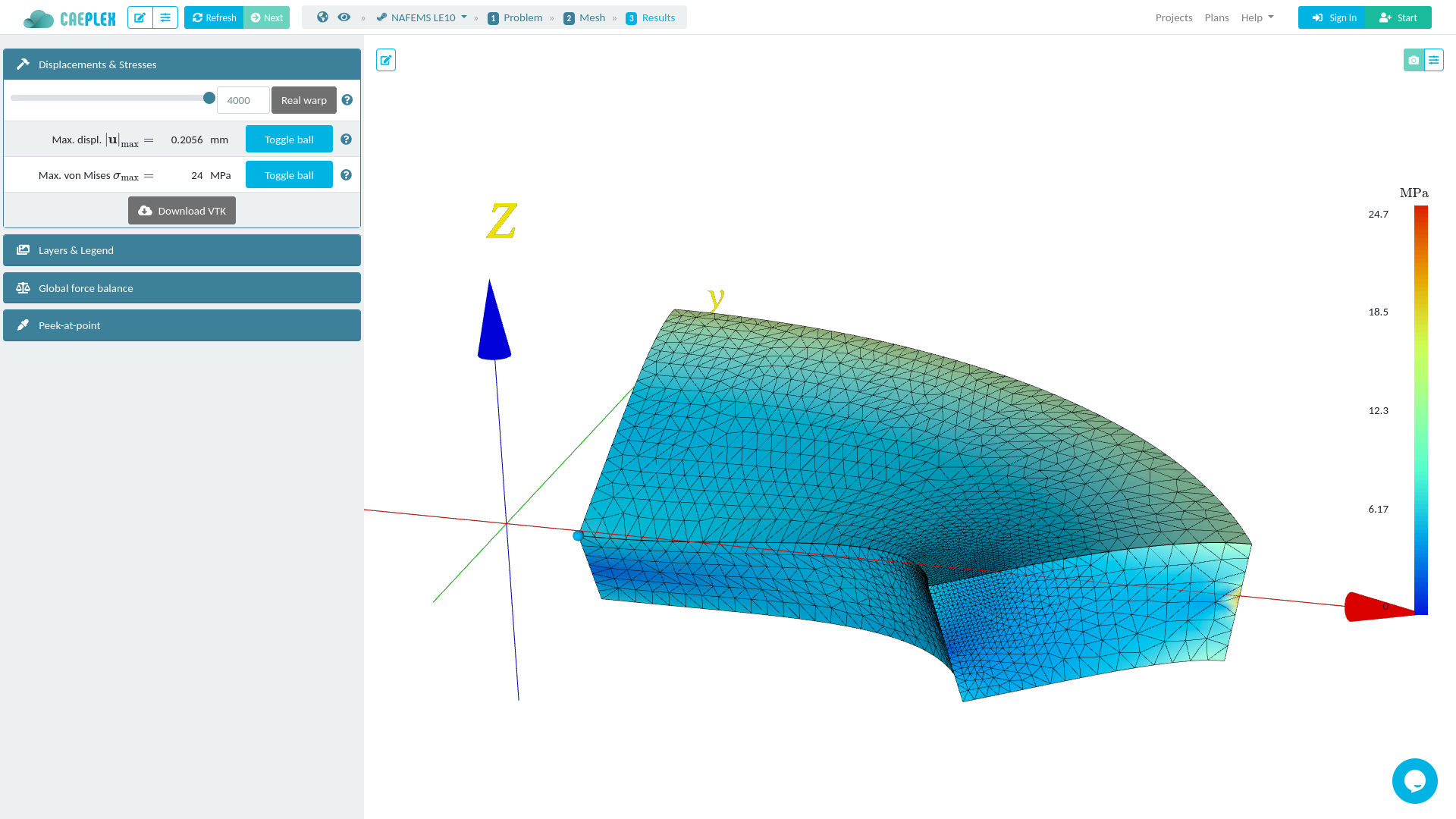

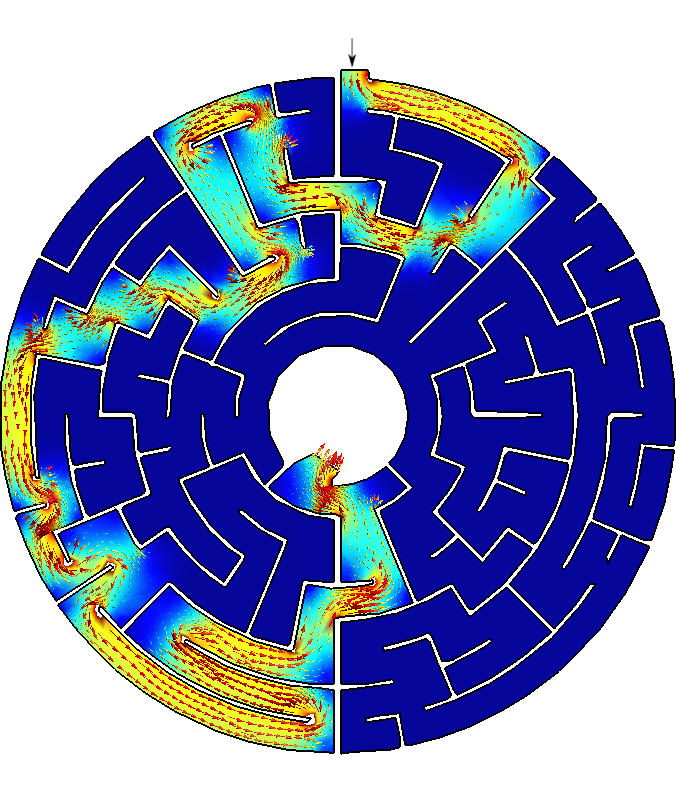

Due to the way that FeenoX is designed and the policy separated from the mechanism, it is possible to control a running instance remotely from a separate client which can eventually run on a mobile device (fig. 2,fig. 5).

The following example illustrates how well FeenoX works as one of many links in a chain that goes from tracing a bitmap with the problem’s geometry down to creating a nice figure with the results of a computation.

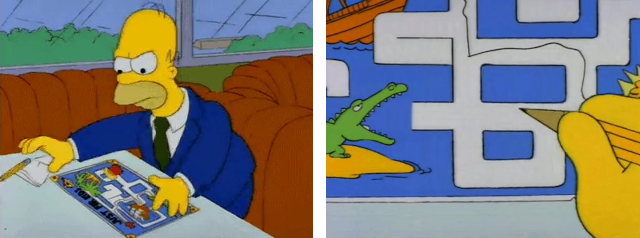

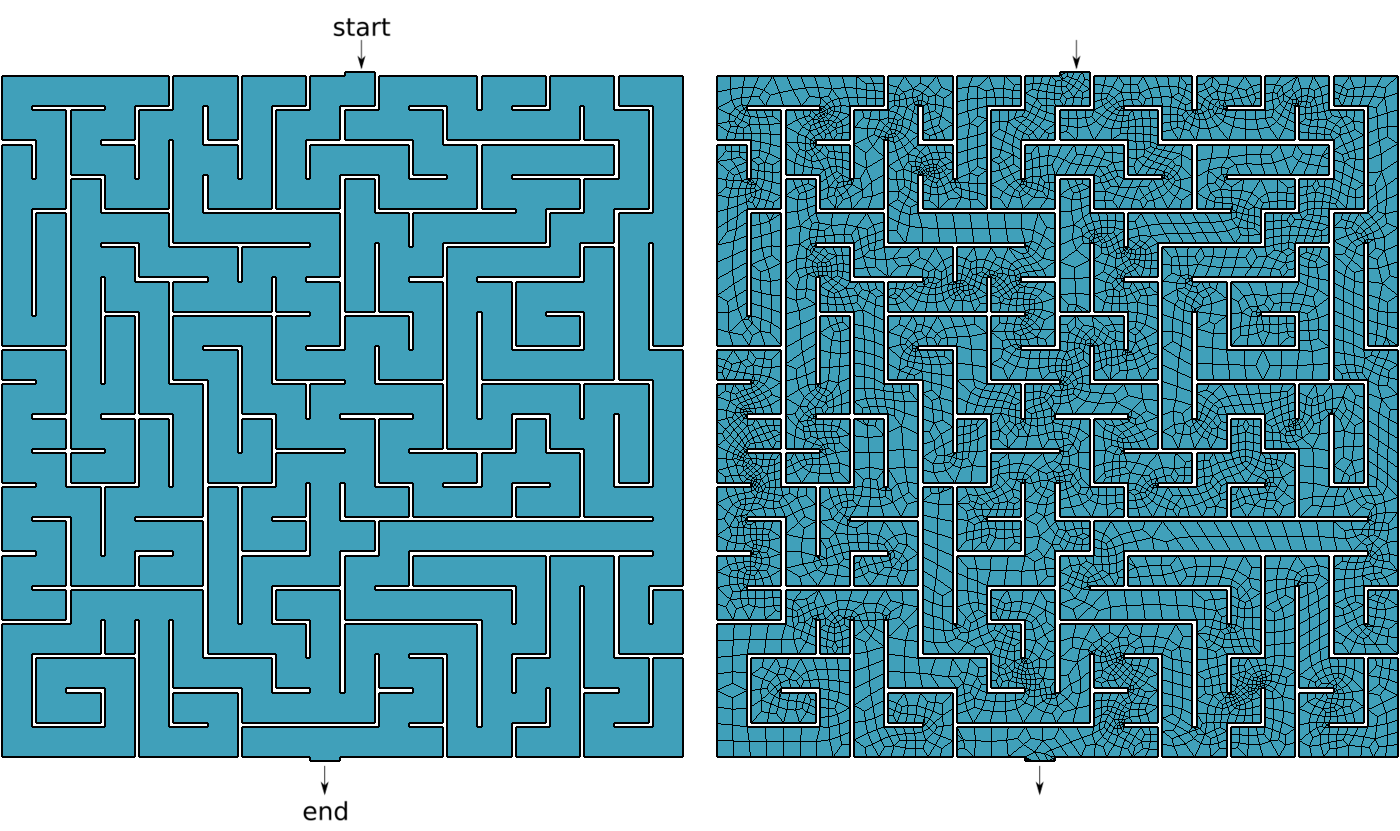

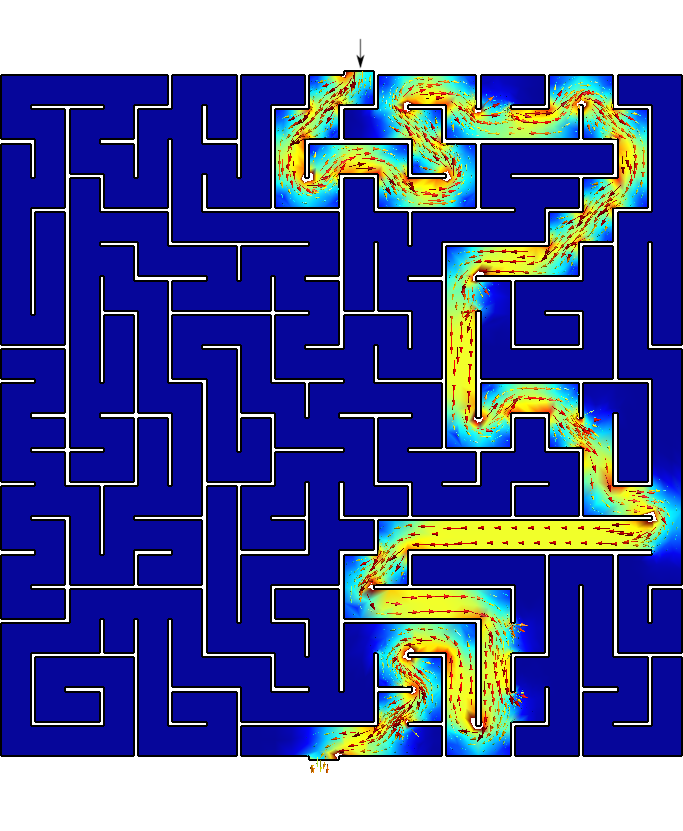

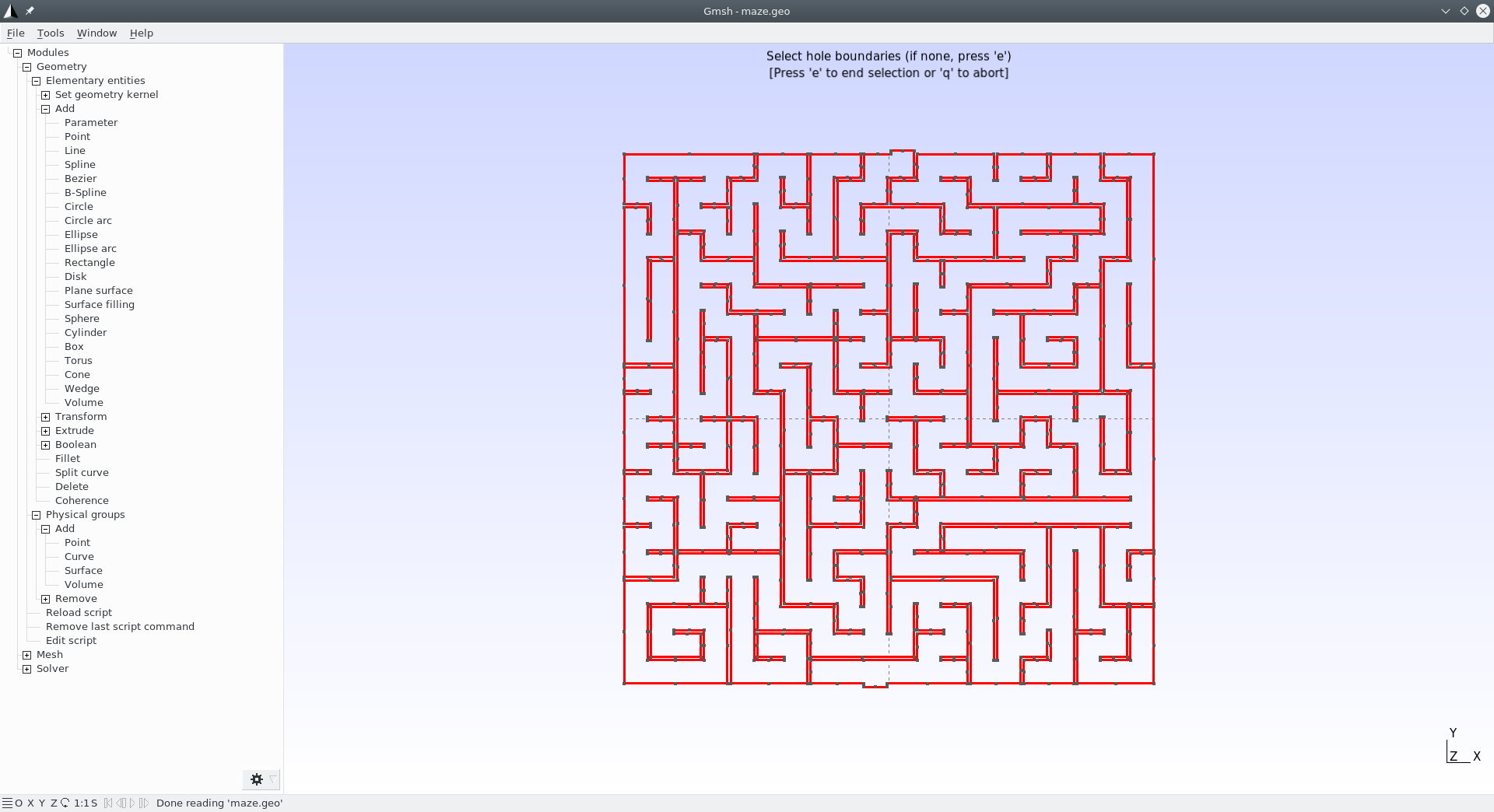

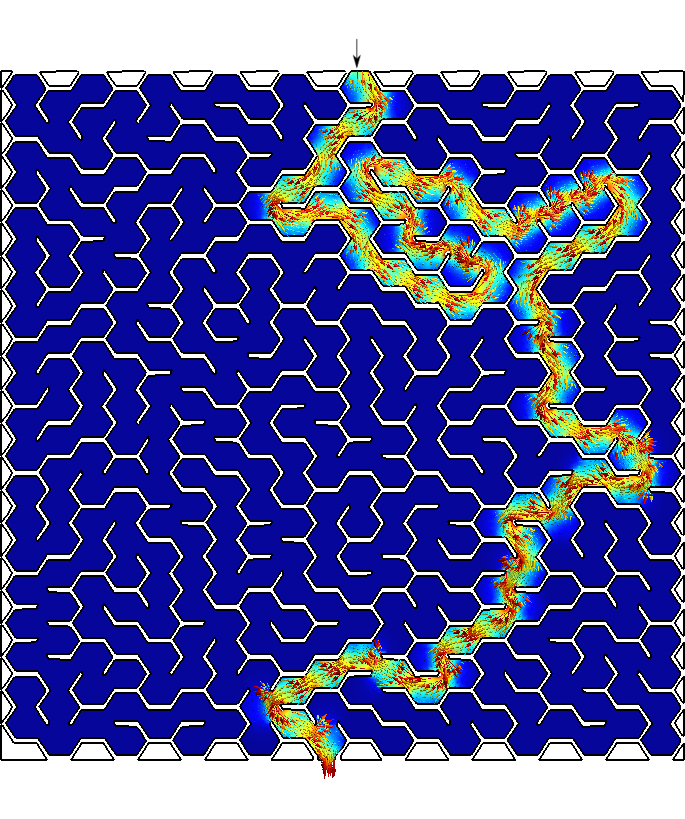

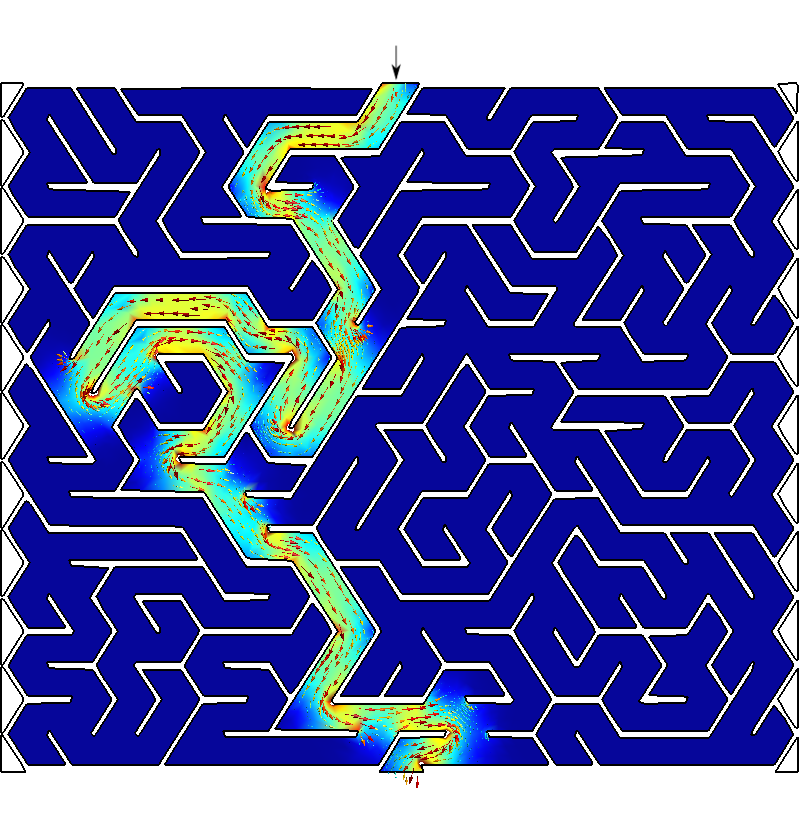

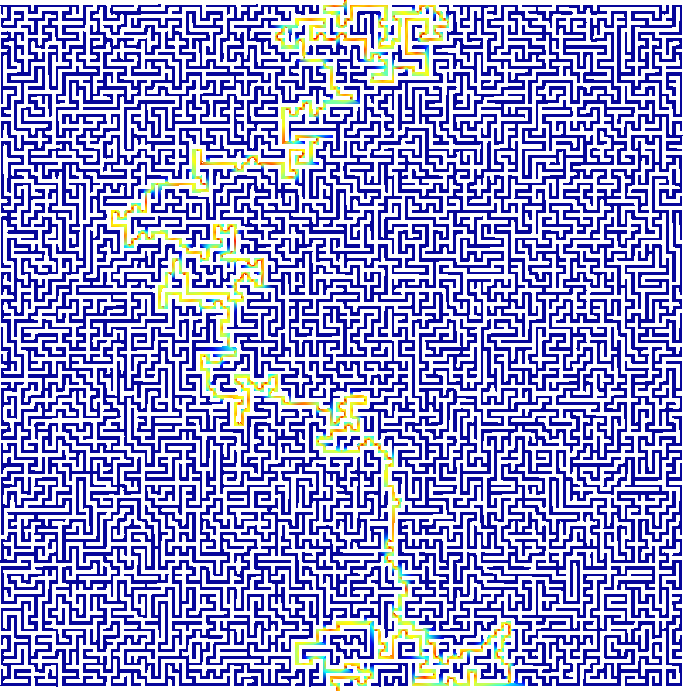

Say you are Homer J. Simpson and you want to solve a maze drawn in a restaurant’s placemat while driving to your wife’s aunt funeral. One where both the start and end points are known beforehand as show in fig. 7. In order to avoid falling into the alligator’s mouth, you can exploit the ellipticity of the Laplacian operator to solve any maze (even a hand-drawn one) without needing any fancy AI or ML algorithm. Just FeenoX and a bunch of standard open source tools to convert a bitmapped picture of the maze into an unstructured mesh.

Figure 8: Bitmapped, meshed and solved mazes.. a — Bitmapped maze from https://www.mazegenerator.net (left) and 2D mesh (right), b — Solution to found by FeenoX (and drawn by Gmsh)

Create a maze

Download it in PNG (fig. 8 (a))

Perform some conversions

- PNG \rightarrow PNM \rightarrow SVG \rightarrow DXF \rightarrow GEO

$ wget http://www.mazegenerator.net/static/orthogonal_maze_with_20_by_20_cells.png $ convert orthogonal_maze_with_20_by_20_cells.png -negate maze.png $ potrace maze.pnm --alphamax 0 --opttolerance 0 -b svg -o maze.svg $ ./svg2dxf maze.svg maze.dxf $ ./dxf2geo maze.dxf 0.1Open it with Gmsh

- Add a surface

- Set physical curves for “start” and “end”

Mesh it (fig. 8 (a))

gmsh -2 maze.geoSolve \nabla^2 \phi = 0 with BCs

\begin{cases} \phi=0 & \text{at “start”} \\ \phi=1 & \text{at “end”} \\ \nabla \phi \cdot \hat{\vec{n}} = 0 & \text{everywhere else} \\ \end{cases}

PROBLEM laplace 2D # pretty self-descriptive, isn't it? READ_MESH maze.msh # boundary conditions (default is homogeneous Neumann) BC start phi=0 BC end phi=1 SOLVE_PROBLEM # write the norm of gradient as a scalar field # and the gradient as a 2d vector into a .msh file WRITE_MESH maze-solved.msh \ sqrt(dphidx(x,y)^2+dphidy(x,y)^2) \ VECTOR dphidx dphidy 0$ feenox maze.fee $Open

maze-solved.msh, go to start and follow the gradient \nabla \phi!

Figure 9: Any arbitrary maze (even hand-drawn) can be solved with FeenoX.

2.1 Deployment

The tool should be easily deployed to production servers. Both

- an automated method for compiling the sources from scratch aiming at obtaining optimized binaries for a particular host architecture should be provided using a well-established procedures, and

- one (or more) generic binary version aiming at common server architectures should be provided.

Either option should be available to be downloaded from suitable online sources, either by real people and/or automated deployment scripts.

As already stated, FeenoX can be compiled from its sources using the

well-established configure & make

procedure. The code’s source tree is hosted on Github so cloning the

repository is the preferred way to obtain FeenoX, but source tarballs

are periodically released too according to the requirements in sec. 4.1.

There are also non-official binary .deb packages which can

be installed with apt using a custom package repository

location.

The configuration and compilation is based on GNU Autotools that has more than thirty years of maturity and it is the most portable way of compiling C code in a wide variety of Unix variants. It has been tested with

- GNU C compiler (free)

- LLVM Clang compiler (free)

- Intel oneAPI C compiler (privative)

FeenoX depends on the four open source libraries stated in sec. 2,

although the last three of them are optional. The only mandatory library

is the GNU Scientific Library which is part of the GNU/Linux operating

system and as such is readily available in all distributions as

libgsl-dev. The sources of the rest of the optional

libraries are also widely available in most common GNU/Linux

distributions.

In effect, doing

sudo apt-get install gcc make libgsl-dev libsundials-dev petsc-dev slepc-devis enough to provision all the dependencies needed compile FeenoX from the source tarball with the full set of features. If using the Git repository as a source, then Git itself and the GNU Autoconf and Automake packages are also needed:

sudo apt-get install git autoconf automakeEven though compiling FeenoX from sources is the recommended way to

obtain the tool—since the target binary can be compiled using

particularly suited compilation options, flags and optimizations

(especially those related to MPI, linear algebra kernels and direct

and/or iterative sparse solvers)–there are also tarballs and

.deb packages with usable binaries for some of the most

common architectures—including some non-GNU/Linux variants. These binary

distributions contain statically-linked executable files that do not

need any other shared libraries to be installed on the target host.

However, their flexibility and efficiency is generic and far from ideal.

Yet the flexibility of having an execution-ready distribution package

for users that do not know how to compile C source code outweighs the

limited functionality and scalability of the tool.

For example, first PETSc can be built with a -Ofast

flag:

$ cd $PETSC_DIR

$ export PETSC_ARCH=linux-fast

$ ./configure --with-debug=0 COPTFLAGS="-Ofast"

$ make -j8

$ cd $HOMEAnd then not only can FeenoX be configured to use that particular

PETSc build but also to use a different compiler such as Clang instead

of GNU GCC and to use the same -Ofast flag to compile

FeenoX itself:

$ git clone https://github.com/seamplex/feenox

$ cd feenox

$ ./autogen.sh

$ export PETSC_ARCH=linux-fast

$ ./configure MPICH_CC=clang CFLAGS=-Ofast

$ make -j8

# make installIf one does not care about the details of the compilation, then a pre-compiled statically-linked binary can be directly downloaded very much as when downloading Gmsh:

$ wget http://gmsh.info/bin/Linux/gmsh-Linux64.tgz

$ wget https://seamplex.com/feenox/dist/linux/feenox-linux-amd64.tar.gzAppendix sec. 13 has more details about how to download and compile FeenoX. The full online documentation contains a compilation guide with further detailed explanations of each of the steps involved.

All the commands needed to either download a binary executable or to

compile from source with customized optimization flags can be automated.

The repository contains a subdirectory dist

with instructions and scripts to build

- source tarballs

- binary tarballs

- Debian-compatible

.debpackages

This way, deployment of the solver can be customized and tweaked as needed, including creating Docker containers with a working version of FeenoX.

2.2 Execution

It is mandatory to be able to execute the tool remotely, either with a direct action from the user or from a high-level workflow which could be triggered by a human or by an automated script. Since it is required for the tool to be able to be run distributed among different servers, proper means to perform this kind of remote executions should be provided. The calling party should be able to monitor the status during run time and get the returned error level after finishing the execution.

The tool shall provide means to perform parametric computations by varying one or more problem parameters in a certain prescribed way such that it can be used as an inner solver for an outer-loop optimization tool. In this regard, it is desirable that the tool could compute scalar values such that the figure of merit being optimized (maximum temperature, total weight, total heat flux, minimum natural frequency, maximum displacement, maximum von Mises stress, etc.) is already available without needing further post-processing.

As requested by the SRS and explained in sec. 1.2, FeenoX is a program that reads the problem to be solved at run-time and not a library that has to be linked against code that defines the problem. Since FeenoX is designed to run as

- a Unix filter, or

- as a transfer function between input and output files

and it explicitly avoids having a graphical interface, the binary

executable works as any other Unix terminal command. Moreover, as

discussed in sec. 2.4, FeenoX uses the MPI standard for parallelization

among several hosts. Therefore, it can be launched through the command

mpiexec

(or

mpirun).

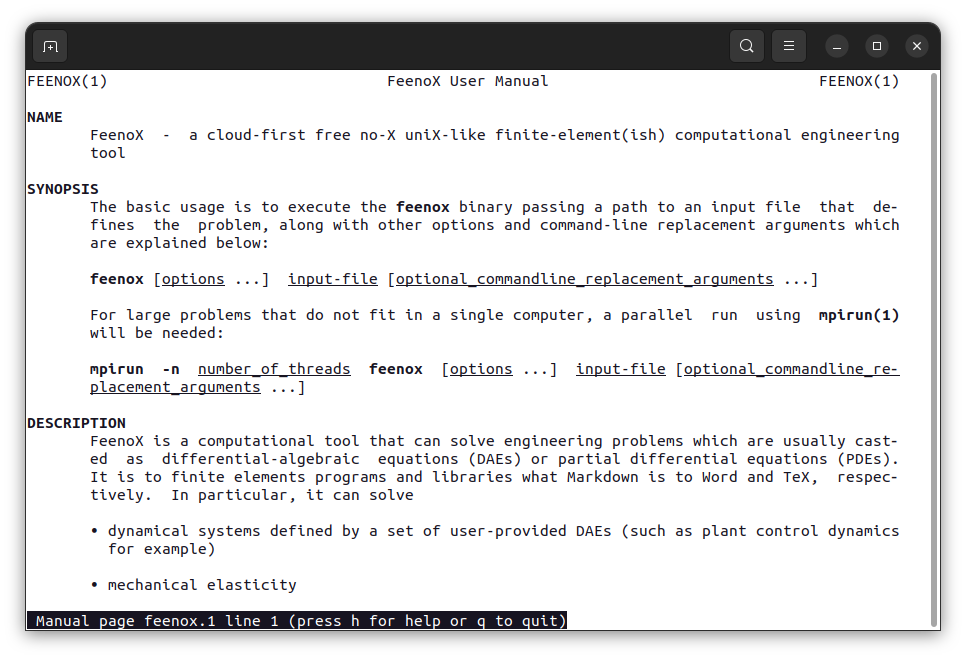

When invoked without arguments, it prints its version (a thorough explanation of the versioning scheme is given in sec. 4.1), a one-line description and the usage options:

$ feenox

FeenoX v1.0.8-g731ca5d

a cloud-first free no-fee no-X uniX-like finite-element(ish) computational engineering tool

usage: feenox [options] inputfile [replacement arguments] [petsc options]

-h, --help display options and detailed explanations of command-line usage

-v, --version display brief version information and exit

-V, --versions display detailed version information

-c, --check validates if the input file is sane or not

--pdes list the types of PROBLEMs that FeenoX can solve, one per line

--elements_info output a document with information about the supported element types

--linear force FeenoX to solve the PDE problem as linear

--non-linear force FeenoX to solve the PDE problem as non-linear

Run with --help for further explanations.

$The program can also be executed remotely either

- on a running server through a SSH session

in serial directly invoking the

feenoxbinaryin parallel through the

mpiexecwrapper, e.g.mpiexec -n 4 feenox input.fee

- spawned by a daemon listening to a network requests,

- in a container as part of a provisioning script,

- in many other ways.

As explained in the help message, FeenoX can read the input from the

standard input if - is specified as the input path. This is

useful in scripts where small calculations are needed, e.g.

$ a=3

$ echo "PRINT 1/$a" | feenox -

0.333333

$ FeenoX provides mechanisms to inform its progress by writing certain

information to devices or files, which in turn can be monitored remotely

or even trigger server actions. Progress can be as simple as an ASCII

bar (triggered with --progress in the command line or with

the keyword PROGRESS

in the input file) to more complex mechanisms like writing the status in

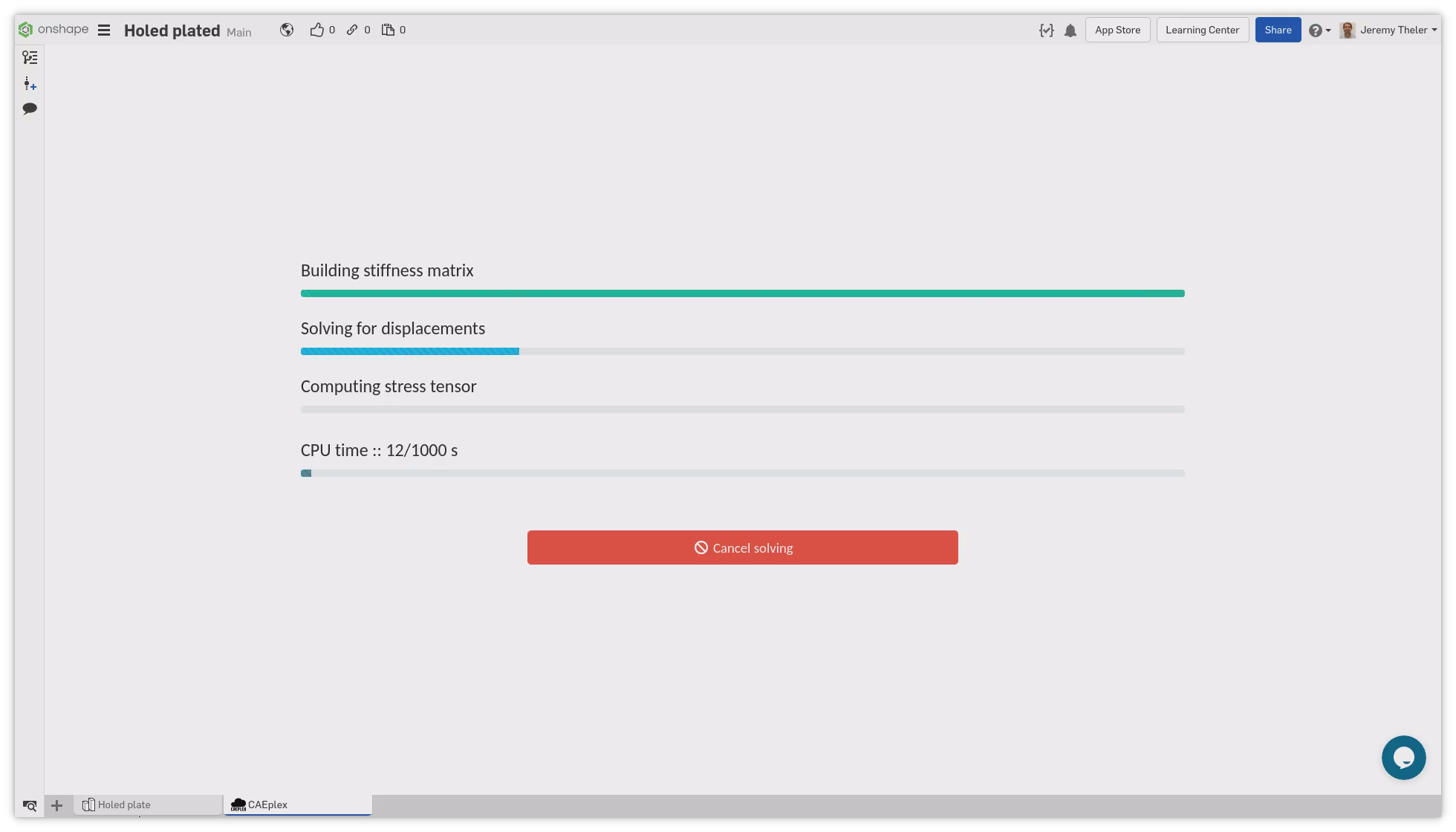

a shared memory segment. Fig. 10 shows how the CAEplex platform shows the progress

interactively in its web-based interface.

Regarding its execution, there are three ways of solving problems:

- direct execution

- parametric runs, and

- optimization loops.

2.2.1 Direct execution

When directly executing FeenoX, one gives a single argument to the executable with the path to the main input file. For example, the following input computes the first twenty numbers of the Fibonacci sequence using the closed-form formula

f(n) = \frac{\varphi^n - (1-\varphi)^n}{\sqrt{5}}

where \varphi=(1+\sqrt{5})/2 is the Golden ratio:

# the Fibonacci sequence using the closed-form formula as a function

phi = (1+sqrt(5))/2

f(n) = (phi^n - (1-phi)^n)/sqrt(5)

PRINT_FUNCTION f MIN 1 MAX 20 STEP 1FeenoX can be directly executed to print the function f(n) for n=1,\dots,20 both to the standard output and

to a file named one (because it is the first way of solving

Fibonacci with Feenox):

$ feenox fibo_formula.fee | tee one

1 1

2 1

3 2

4 3

5 5

6 8

7 13

8 21

9 34

10 55

11 89

12 144

13 233

14 377

15 610

16 987

17 1597

18 2584

19 4181

20 6765

$Now, we could also have computed these twenty numbers by using the

direct definition of the sequence into a vector \vec{f} of size 20. This time we redirect the

output to a file named two:

# the fibonacci sequence as a vector

VECTOR f SIZE 20

f[i]<1:2> = 1

f[i]<3:vecsize(f)> = f[i-2] + f[i-1]

PRINT_VECTOR i f$ feenox fibo_vector.fee > two

$ Finally, we print the sequence as an iterative problem and check that the three outputs are the same:

# the fibonacci sequence as an iterative problem

static_steps = 20

#static_iterations = 1476 # limit of doubles

IF step_static=1|step_static=2

f_n = 1

f_nminus1 = 1

f_nminus2 = 1

ELSE

f_n = f_nminus1 + f_nminus2

f_nminus2 = f_nminus1

f_nminus1 = f_n

ENDIF

PRINT step_static f_n$ feenox fibo_iterative.fee > three

$ diff one two

$ diff two three

$These three calls were examples of direct execution of FeenoX: a single call with a single argument to solve a single fixed problem.

2.2.2 Parametric

To use FeenoX in a parametric run, one has to successively call the

executable passing the main input file path in the first argument

followed by an arbitrary number of parameters. These extra parameters

will be expanded as string literals $1, $2,

etc. appearing in the input file. For example, if hello.fee

is

PRINT "Hello $1!"then

$ feenox hello.fee World

Hello World!

$ feenox hello.fee Universe

Hello Universe!

$To have an actual parametric run, an external loop has to

successively call FeenoX with the parametric arguments. For example, say

this file cantilever.fee fixes the face called “left” and

sets a load in the negative z direction

of a mesh called cantilever-$1-$2.msh. The output is a

single line containing the number of nodes of the mesh and the

displacement in the vertical direction w(500,0,0) at the center of the cantilever’s

free face:

PROBLEM elastic 3D

READ_MESH cantilever-$1-$2.msh # in meters

E = 2.1e11 # Young modulus in Pascals

nu = 0.3 # Poisson's ratio

BC left fixed

BC right tz=-1e5 # traction in Pascals, negative z

SOLVE_PROBLEM

# z-displacement (components are u,v,w) at the tip vs. number of nodes

PRINT nodes w(500,0,0) "\# $1 $2"

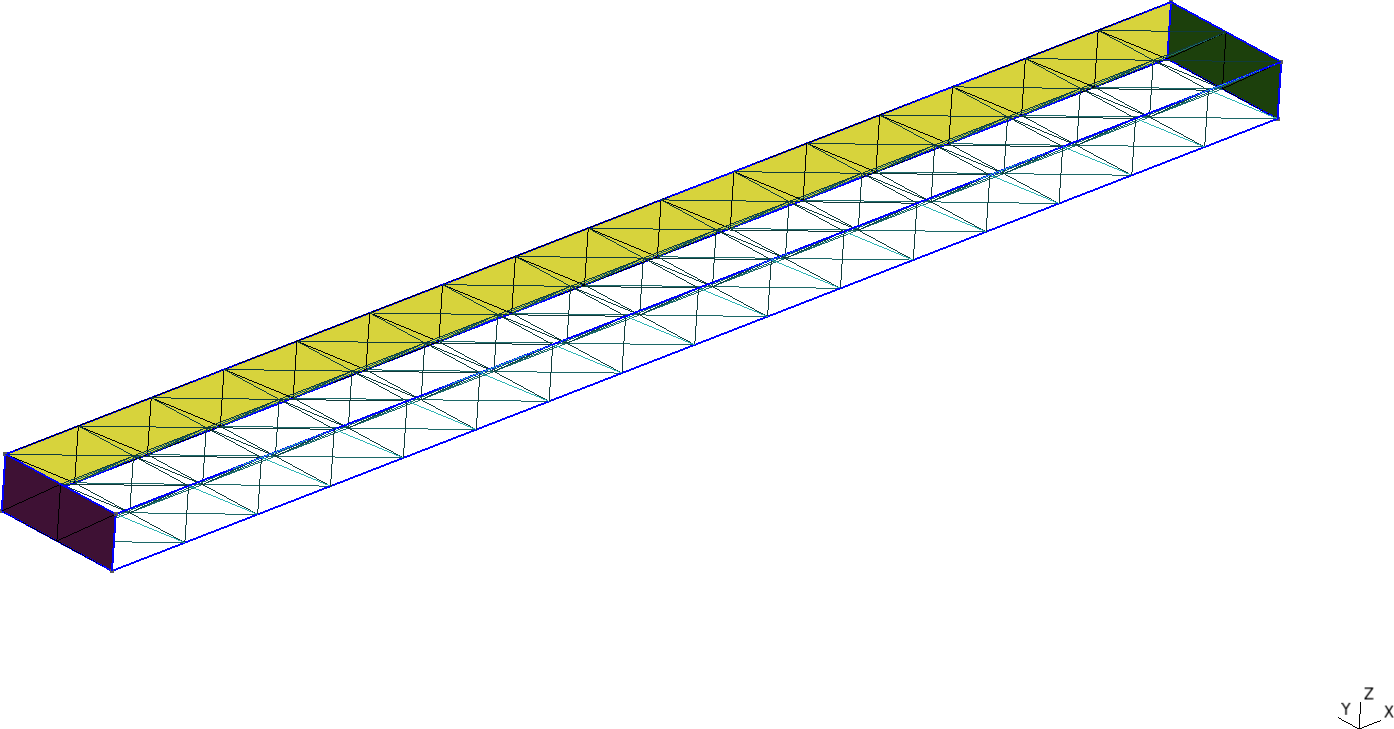

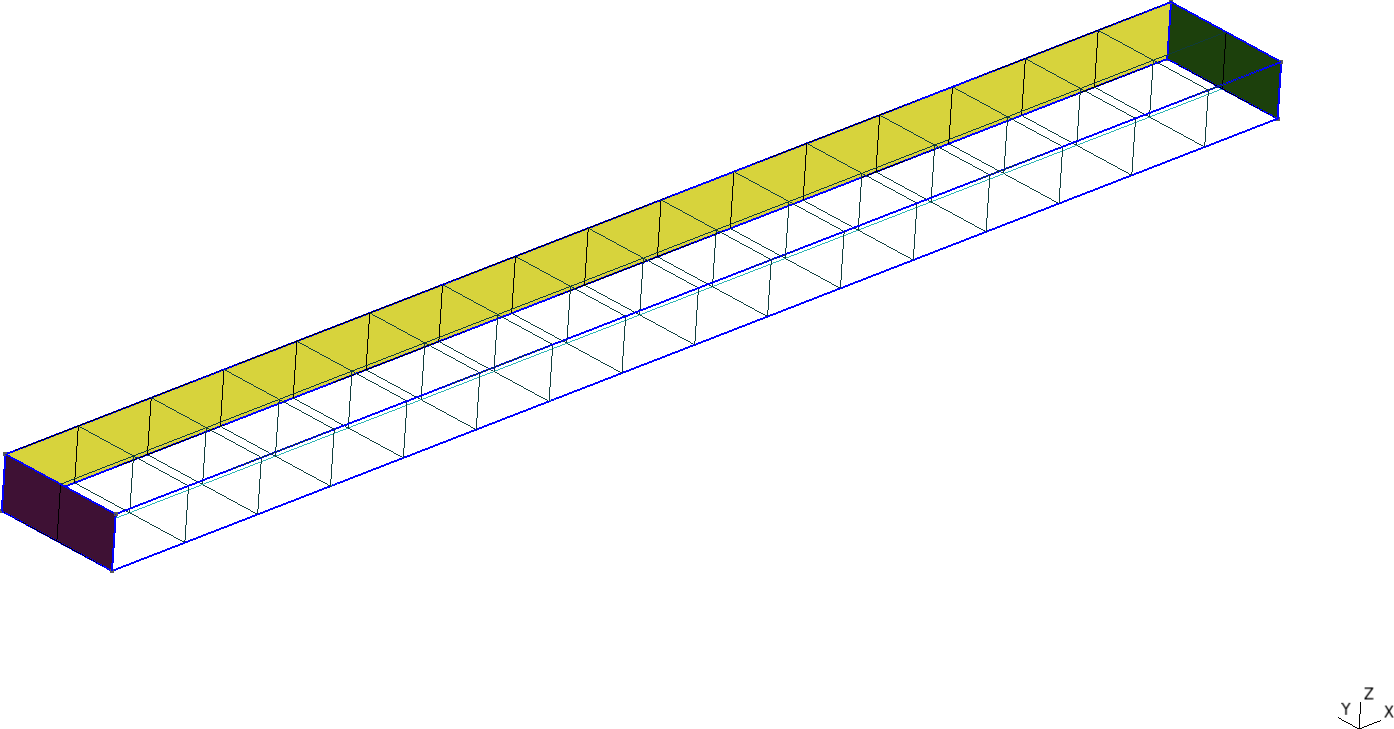

Figure 11: Cantilevered beam meshed with structured tetrahedra and hexahedra. a — Tetrahedra, b — Hexahedra

Now the following Bash script

first calls Gmsh to create the meshes

cantilever-${element}-${c}.msh where

${element}: tet4, tet10, hex8, hex20, hex27${c}: 1,2,,10

It then calls FeenoX with the input above and passes

${element} and ${c} as extra arguments, which

then are expanded as $1 and $2

respectively.

#!/bin/bash

rm -f *.dat

for element in tet4 tet10 hex8 hex20 hex27; do

for c in $(seq 1 10); do

# create mesh if not already cached

mesh=cantilever-${element}-${c}

if [ ! -e ${mesh}.msh ]; then

scale=$(echo "PRINT 1/${c}" | feenox -)

gmsh -3 -v 0 cantilever-${element}.geo -clscale ${scale} -o ${mesh}.msh

fi

# call FeenoX

feenox cantilever.fee ${element} ${c} | tee -a cantilever-${element}.dat

done

doneAfter the execution of the script, thanks to the design decision

(explained in sec. 3.2) that output is 100% defined by the user (in this

case with the PRINT

instruction), one has several files

cantilever-${element}.dat files. When plotted, these show

the shear locking effect of fully-integrated first-order elements as

illustrated in fig. 12. The theoretical Euler-Bernoulli

result is just a reference as, among other things, it does not take into

account the effect of the material’s Poisson’s

ratio. Note that the abscissa shows the number of nodes,

which are proportional to the number of degrees of freedom (i.e. the

size of the problem matrix) and not the number of elements,

which is irrelevant here and in most problems.

2.2.3 Optimization loops

Optimization loops work very much like parametric runs from the FeenoX point of view. The difference is mainly on the calling script that has to implement a certain optimization algorithm such as conjugate gradients, Nelder-Mead, simulated annealing, genetic algorithms, etc. to choose which parameters to pass to FeenoX as command-line argument. The only particularity on FeenoX’s side is that since the next argument that the optimization loop will pass might depend on the result of the current step, care has to be taken in order to be able to return back to the calling script whatever results it needs in order to compute the next arguments. This is usually just the scalar being optimized for, but it can also include other results such as derivatives or other relevant data.

To illustrate how to use FeenoX in an optimization loop, let us consider the problem of finding the length \ell_1 of a tuning fork (fig. 13) such that the fundamental frequency on a free-free oscillation is equal to the base A frequency at 440 Hz.

This extremely simple input file (rule of simplicity sec. 11.5) solves the free-free mechanical modal problem (i.e. without any Dirichlet boundary condition) and prints the fundamental frequency:

PROBLEM modal 3D MODES 1 # only one mode needed

READ_MESH fork.msh # in [m]

E = 2.07e11 # in [Pa]

nu = 0.33

rho = 7829 # in [kg/m^2]

# no BCs! It is a free-free vibration problem

SOLVE_PROBLEM

# write back the fundamental frequency to stdout

PRINT f(1)Note that in this particular case, the FeenoX input files does not

expand any command-line argument. The trick is that the mesh file

fork.msh is overwritten in each call of the optimization

loop. Since this time the loop is slightly more complex than in the

parametric run of the last section, we now use Python. The function

create_mesh() first creates a CAD model of the fork with

geometrical parameters r, w, \ell_1

and \ell_2. It then meshes the CAD

using n structured hexahedra through

the fork’s thickness. Both the CAD and the mesh are created using the

Gmsh Python API. The detailed steps between

gmsh.initialize() and gmsh.finalize() are not

shown here, just the fact that this function overwrites the previous

mesh and always writes it into the file called fork.msh

which is the one that fork.fee reads. Hence, there is no

need to pass command-liner arguments to FeenoX. The full implementation

of the function is available in the examples directory of the FeenoX

distribution.

import math

import gmsh

import subprocess # to call FeenoX and read back

def create_mesh(r, w, l1, l2, n):

gmsh.initialize()

...

gmsh.write("fork.msh")

gmsh.finalize()

return len(nodes)

def main():

target = 440 # target frequency

eps = 1e-2 # tolerance

r = 4.2e-3 # geometric parameters

w = 3e-3

l1 = 30e-3

l2 = 60e-3

for n in range(1,7): # mesh refinement level

l1 = 60e-3 # restart l1 & error

error = 60

while abs(error) > eps: # loop

l1 = l1 - 1e-4*error

# mesh with Gmsh Python API

nodes = create_mesh(r, w, l1, l2, n)

# call FeenoX and read scalar back

# TODO: FeenoX Python API (like Gmsh)

result = subprocess.run(['feenox', 'fork.fee'], stdout=subprocess.PIPE)

freq = float(result.stdout.decode('utf-8'))

error = target - freq

print(nodes, l1, freq)Since the computed frequency depends both on the length \ell_1 and on the mesh refinement level n, there are actually two nested loops: one parametric over n=1,2\dots,7 and the optimization loop itself that tries to find \ell_1 so as to obtain a frequency equal to 440 Hz within 0.01% of error.

$ python fork.py > fork.dat

$Note that the approach used here is to use Gmsh Python API to build

the mesh and then fork the FeenoX executable to solve the fork (no pun

intended). There are plans to provide a Python API for FeenoX so the

problem can be set up, solved and the results read back directly from

the script instead of needing to do a fork+exec, read back the standard

output as a string and then convert it to a Python

float.

Fig. 14 shows the results of the combination of the optimization loop over \ell_1 and a parametric run over n. The difference for n=6 and n=7 is in the order of one hundredth of millimeter.

2.3 Efficiency

As required in the previous section, it is mandatory to be able to execute the tool on one or more remote servers. The computational resources needed from this server, i.e. costs measured in

- CPU/GPU time

- random-access memory

- long-term storage

- etc.

needed to solve a problem should be comparable to other similar state-of-the-art cloud-based script-friendly finite-element tools.

One of the most widely known quotations in computer science is that one that says “premature optimization is the root of all evil.” that is an extremely over-simplified version of Donald E. Knuth’s analysis in his The Art of Computer Programming. Bottom line is that the programmer should not not spend too much time trying to optimize code based on hunches but based on profiling measurements. Yet a disciplined programmer can tell when an algorithm will be way too inefficient (say something that scales up like O(n^2)) and how small changes can improve performance (say by understanding how caching levels work in order to implement faster nested loops). It is also true that usually an improvement in one aspect leads to a deterioration in another one (e.g. a decrease in CPU time by caching intermediate results in an increase of RAM usage).

Even though FeenoX is still evolving so it could be premature in many cases, it is informative to compare running times and memory consumption when solving the same problem with different cloud-friendly FEA programs. In effect, a serial single-thread single-host comparison of resource usage when solving the NAFEMS LE10 problem introduced above was performed, using both unstructured tetrahedral and structured hexahedral meshes. Fig. 15 shows two figures of the many ones contained in the detailed report. In general, FeenoX using the iterative approach based on PETSc’s Geometric-Algebraic Multigrid Preconditioner and a conjugate gradients solver is faster for (relatively) large problems at the expense of a larger memory consumption. The curves that use MUMPS confirm the well-known theoretical result that direct linear solvers are robust but not scalable.

Figure 15: Resource consumption when solving the NAFEMS LE10 problem in the cloud for tetrahedral meshes.. a — Wall time vs. number of degrees of freedom, b — Memory vs. number of degrees of freedom

Regarding storage, FeenoX needs space to store the input file

(negligible), the mesh file in .msh format (which can be

either ASCII or binary) and the optional output files in

.msh or .vtu/.vtk formats. All of

these files can be stored gzip-compressed and un-compressed on demand by

exploiting FeenoX’s script-friendliness using proper calls to

gzip before and/or after calling the feenox

binary.

2.4 Scalability

The tool ought to be able to start solving small problems first to check the inputs and outputs behave as expected and then allow increasing the problem size up in order to achieve to the desired accuracy of the results. As mentioned in sec. 2, large problem should be split among different computers to be able to solve them using a finite amount of per-host computational power (RAM and CPU).

When for a fixed problem the mesh is refined over and over, more and more computational resources are needed to solve it (and to obtain more accurate results, of course). Parallelization can help to

- reduce the wall time needed to solve a problem by using several processors at the same time

- allow to solve big problems that would not fit into a single computer by splitting them into smaller parts, each of them fitting in a single computer

There are three types of parallelization schemes:

- Shared-memory systems (OpenMP)

-

several processing units sharing a single memory address space

- Distributed systems (MPI)

-

several computational units, each with their own processing units and memory, inter-connected with high-speed network hardware

- Graphical processing units (GPU)

-

used as co-processors to solve numerically-intensive problems

In principle, any of these three schemes can be used to reduce the wall time (a). But only the distributed systems scheme allows to solve arbitrarily big problems (b).

It might seem that the most effective approach to solve a large problem is to use OpenMP (not to be confused with OpenMPI!) among threads running in processors that share the memory address space and to use MPI among processes running in different hosts. But even though this hybrid OpenMP+MPI scheme is possible, there are at least three main drawbacks with respect to a pure MPI approach:

- the overall performance is not be significantly better

- the amount of lines of code that has to be maintained is more than doubled

- the number of possible points of synchronization failure increases

In many ways, the pure MPI mode has fewer synchronizations and thus should perform better. Hence, FeenoX uses MPI (mainly through PETSc and SLEPc) to handle large parallel problems.

To illustrate FeenoX’s MPI features, let us consider the following input file (which is part of FeenoX’s tests suite):

PRINTF_ALL "Hello MPI World!"The instruction PRINTF_ALL (at the end of the day, it is

a verb) asks all the processes to write the

printf-formatted arguments in the standard output. A prefix

is added to each line with the process id and the name of the host. When

running FeenoX with this input file through mpiexec in an

AWS server which has already been properly configured to connect to

another one and split the MPI processes, we get:

ubuntu@ip-172-31-44-208:~/mpi/hello$ mpiexec --verbose --oversubscribe --hostfile hosts -np 4 ./feenox hello_mpi.fee

[0/4 ip-172-31-44-208] Hello MPI World!

[1/4 ip-172-31-44-208] Hello MPI World!

[2/4 ip-172-31-34-195] Hello MPI World!

[3/4 ip-172-31-34-195] Hello MPI World!

ubuntu@ip-172-31-44-208:~/mpi/hello$ That is to say,host ip-172-31-44-208 spawns two local

processes feenox and, at the same time, asks host

ip-172-31-34-195 to create two new processes in it. This

scheme would allow to solve a problem in parallel where the CPU and RAM

loads are split into two different servers.

We can used Gmsh’s tutorial t21 that illustrated the

concept of domain decomposition (DDM) to show another aspect of how MPI

parallelization works in FeenoX. In effect, let us consider the mesh

from fig. 16 that consists of two non-dimensional squares of size 1 \times 1 and let us say we want to compute

the integral of the constant 1 over the surface to obtain the numerical

result 2.

READ_MESH t21.msh

INTEGRATE 1 RESULT two

PRINTF_ALL "%g" twoIn this case, the instruction INTEGRATE is executed in

parallel where each process computes the local contribution and, before

moving into the next instruction (PRINTF_ALL), all

processes synchronize and sum up all these contributions (i.e. they

perform a sum reduction) and all the processes obtain the global result

in the variable two:

$ mpiexec -n 2 feenox t21.fee

[0/2 tom] 2

[1/2 tom] 2

$ mpiexec -n 4 feenox t21.fee

[0/4 tom] 2

[1/4 tom] 2

[2/4 tom] 2

[3/4 tom] 2

$ mpiexec -n 6 feenox t21.fee

[0/6 tom] 2

[1/6 tom] 2

[2/6 tom] 2

[3/6 tom] 2

[4/6 tom] 2

[5/6 tom] 2

$ To illustrate what is happening under the hood, let us temporarily modify the FeenoX source code so that each process shows the local contribution:

$ mpiexec -n 2 feenox t21.fee

[process 0] my local integral is 0.996699

[process 1] my local integral is 1.0033

[0/2 tom] 2

[1/2 tom] 2

$ mpiexec -n 3 feenox t21.fee

[process 0] my local integral is 0.658438

[process 1] my local integral is 0.672813

[process 2] my local integral is 0.668749

[0/3 tom] 2

[1/3 tom] 2

[2/3 tom] 2

$ mpiexec -n 4 feenox t21.fee

[process 0] my local integral is 0.505285

[process 1] my local integral is 0.496811

[process 2] my local integral is 0.500788

[process 3] my local integral is 0.497116

[0/4 tom] 2

[1/4 tom] 2

[2/4 tom] 2

[3/4 tom] 2

$ mpiexec -n 5 feenox t21.fee

[process 0] my local integral is 0.403677

[process 1] my local integral is 0.401883

[process 2] my local integral is 0.399116

[process 3] my local integral is 0.400042

[process 4] my local integral is 0.395281

[0/5 tom] 2

[1/5 tom] 2

[2/5 tom] 2

[3/5 tom] 2

[4/5 tom] 2

$ mpiexec -n 6 feenox t21.fee

[process 0] my local integral is 0.327539

[process 1] my local integral is 0.330899

[process 2] my local integral is 0.338261

[process 3] my local integral is 0.334552

[process 4] my local integral is 0.332716

[process 5] my local integral is 0.336033

[0/6 tom] 2

[1/6 tom] 2

[2/6 tom] 2

[3/6 tom] 2

[4/6 tom] 2

[5/6 tom] 2

$ Note that in the cases with 4 and 5 processes, the number of partitions P is not a multiple of the number of processes N. Anyway, FeenoX is able to distribute the load is able to distribute the load among the N processes, even though the efficiency is slightly less than in the other cases. :::

When solving PDEs, FeenoX builds the local matrices and vectors and then asks PETSc to assemble the global objects by sending non-local information as MPI messages. This way, all processes have contiguous rows as local data and the system of equations can be solved in parallel using the distributed system paradigm.

We can show that both

- the wall time, and

- the per-process memory

decrease when running a fixed-sized problem with MPI in parallel using the IAEA 3D PWR benchmark:

PROBLEM neutron_diffusion 3D GROUPS 2

DEFAULT_ARGUMENT_VALUE 1 quarter

READ_MESH iaea-3dpwr-$1.msh

MATERIAL fuel1 D1=1.5 D2=0.4 Sigma_s1.2=0.02 Sigma_a1=0.01 Sigma_a2=0.08 nuSigma_f2=0.135

MATERIAL fuel2 D1=1.5 D2=0.4 Sigma_s1.2=0.02 Sigma_a1=0.01 Sigma_a2=0.085 nuSigma_f2=0.135

MATERIAL fuel2rod D1=1.5 D2=0.4 Sigma_s1.2=0.02 Sigma_a1=0.01 Sigma_a2=0.13 nuSigma_f2=0.135

MATERIAL reflector D1=2.0 D2=0.3 Sigma_s1.2=0.04 Sigma_a1=0 Sigma_a2=0.01 nuSigma_f2=0

MATERIAL reflrod D1=2.0 D2=0.3 Sigma_s1.2=0.04 Sigma_a1=0 Sigma_a2=0.055 nuSigma_f2=0

BC vacuum vacuum=0.4692

BC mirror mirror

SOLVE_PROBLEM

WRITE_RESULTS FORMAT vtk

PRINT "geometry = $1"

PRINTF " keff = %.5f" keff

PRINTF " nodes = %g" nodes

PRINTF " DOFs = %g" total_dofs

PRINTF " memory = %.1f Gb (local) %.1f Gb (global)" mpi_memory_local() mpi_memory_global()

PRINTF " wall = %.1f sec" wall_time()$ mpiexec -n 1 feenox iaea-3dpwr.fee quarter

geometry = quarter

keff = 1.02918

nodes = 70779

DOFs = 141558

[0/1 tux] memory = 2.3 Gb (local) 2.3 Gb (global)

wall = 26.2 sec

$ mpiexec -n 2 feenox iaea-3dpwr.fee quarter

geometry = quarter

keff = 1.02918

nodes = 70779

DOFs = 141558

[0/2 tux] memory = 1.5 Gb (local) 3.0 Gb (global)

[1/2 tux] memory = 1.5 Gb (local) 3.0 Gb (global)

wall = 17.0 sec

$ mpiexec -n 4 feenox iaea-3dpwr.fee quarter

geometry = quarter

keff = 1.02918

nodes = 70779

DOFs = 141558

[0/4 tux] memory = 1.0 Gb (local) 3.9 Gb (global)

[1/4 tux] memory = 0.9 Gb (local) 3.9 Gb (global)

[2/4 tux] memory = 1.1 Gb (local) 3.9 Gb (global)

[3/4 tux] memory = 0.9 Gb (local) 3.9 Gb (global)

wall = 13.0 sec

$ 2.5 Flexibility

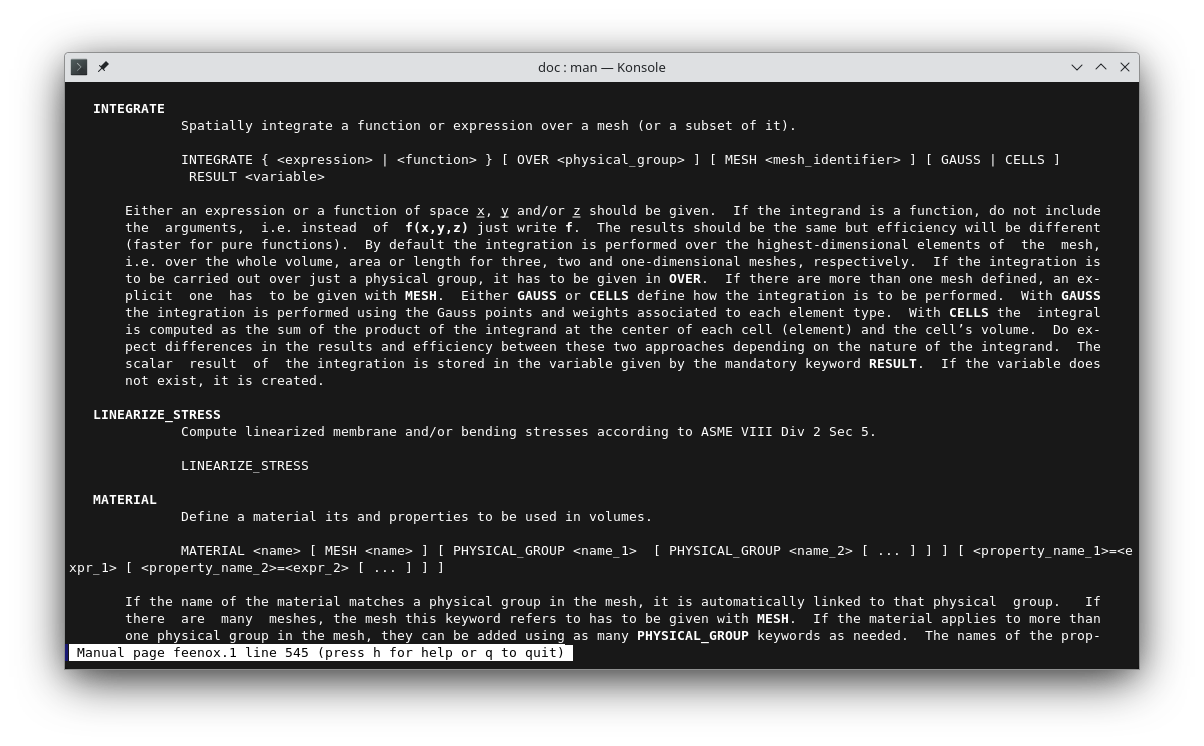

The tool should be able to handle engineering problems involving different materials with potential spatial and time-dependent properties, such as temperature-dependent thermal expansion coefficients and/or non-constant densities. Boundary conditions must be allowed to depend on both space and time as well, like non-uniform pressure loads and/or transient heat fluxes.

The third-system effect mentioned in sec. 2 involves more than ten

years of experience in the nuclear industry,4

where complex dependencies of multiple material properties over space

through intermediate distributions (temperature, neutronic poisons,

etc.) and time (control rod positions, fuel burn-up, etc.) are

mandatory. One of the cornerstone design decisions in FeenoX is that

everything is an expression (sec. 3.1.5). Here,

“everything” means any location in the input file where a numerical

value is expected. The most common use case is in the PRINT

keyword. For example, the Sophomore’s

dream (in contrast to Freshman’s

dream) identity

\int_{0}^{1} x^{-x} \, dx = \sum_{n=1}^{\infty} n^{-n}

can be illustrated like this:

VAR x

PRINT %.7f integral(x^(-x),x,0,1)

VAR n

PRINT %.7f sum(n^(-n),n,1,1000)$ feenox sophomore.fee

1.2912861

1.2912860

$Of course most engineering problems will not need explicit integrals—although a few of them do—but some might need summation loops, so it is handy to have these functionals available inside the FEA tool. This might seem to go against the “keep it simple” and “do one thing good” Unix principle, but definitely follows Alan Kay’s idea that “simple things should be simple, complex things should be possible” (further discussion in sec. 3.1.4).

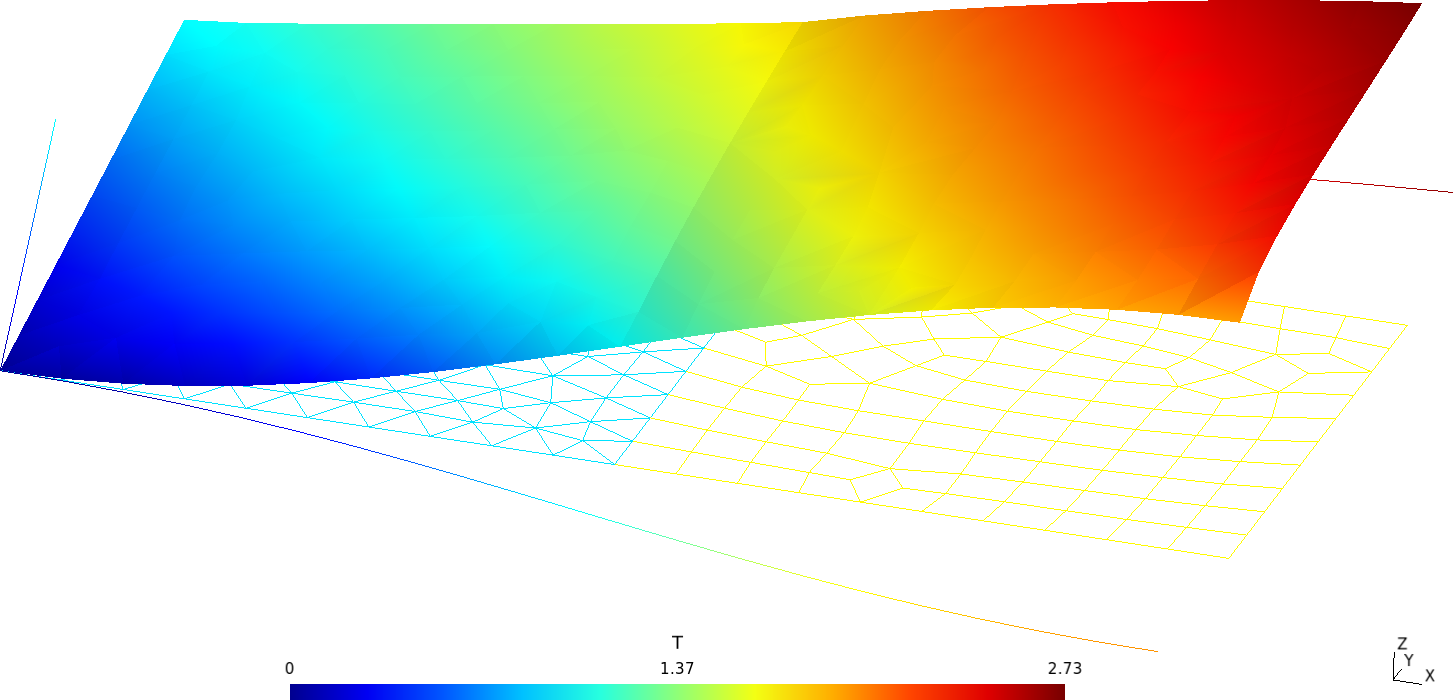

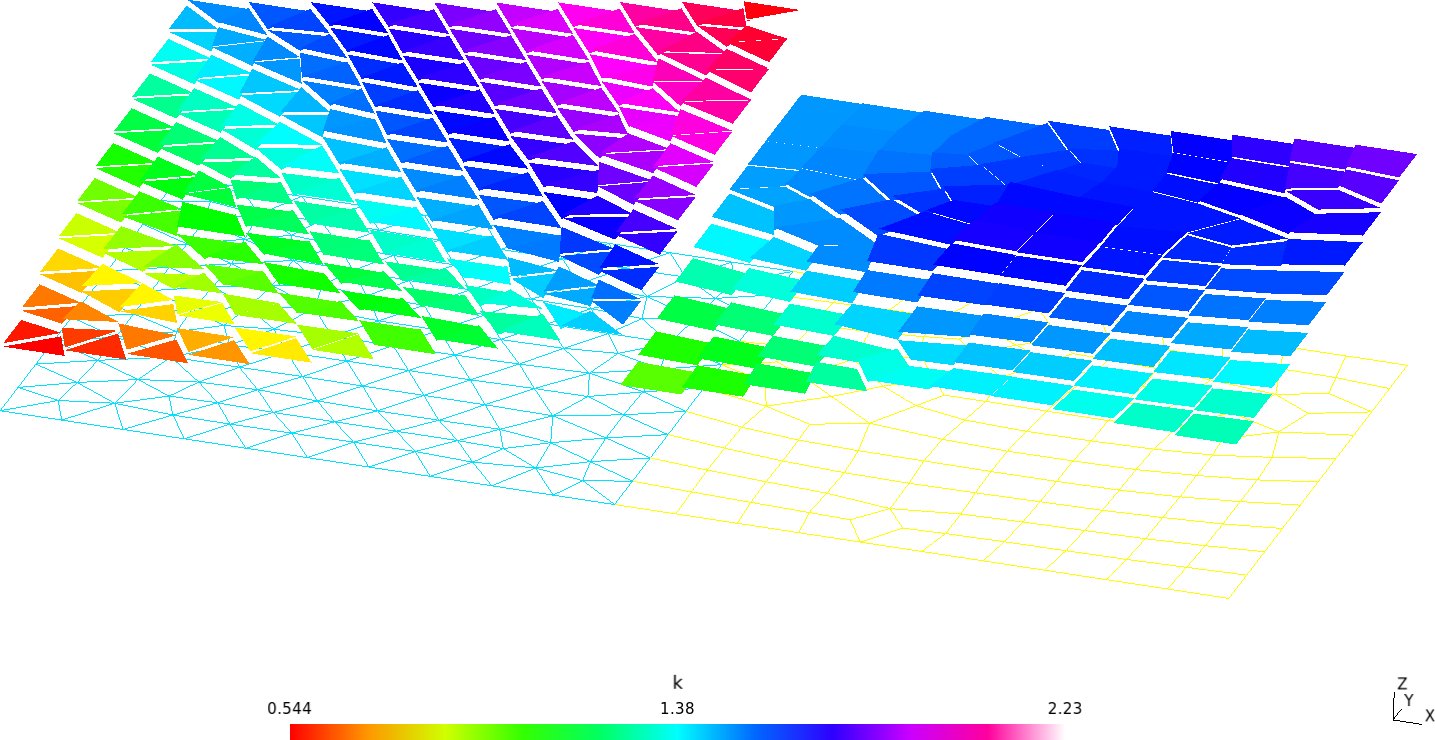

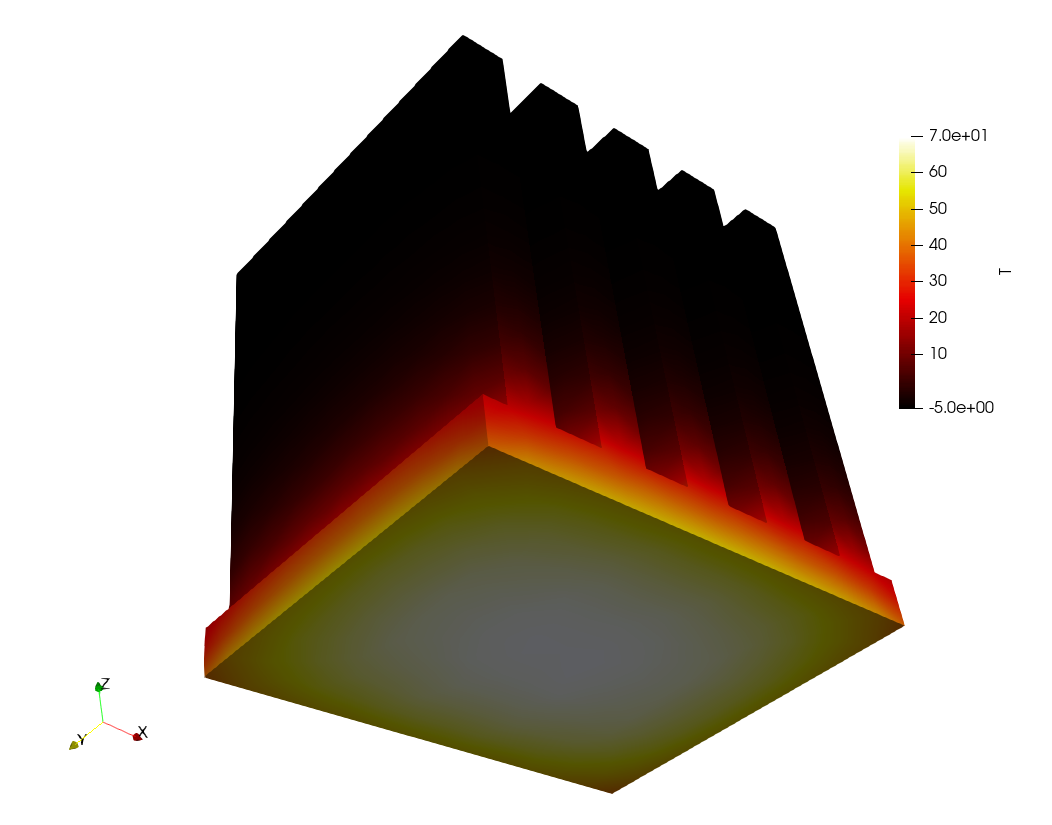

Flexibility in defining non-trivial material properties is illustrated with the following example, where two squares made of different dimensionless materials are juxtaposed in thermal contact (glued?) and subject to different boundary conditions at each of the four sides (fig. 17).

The yellow square is made of a certain material with a conductivity that depends algebraically (and fictitiously) the temperature like

k_\text{yellow}(x,y) = \frac{1}{2} + T(x,y)

The cyan square has a space-dependent temperature given by a table of scattered data as a function of the spatial coordinates x and y (origin is left bottom corner of the yellow square) without any particular structure on the definition points:

| x | y | k_\text{cyan}(x,y) |

|---|---|---|

| 1 | 0 | 1.0 |

| 1 | 1 | 1.5 |

| 2 | 0 | 1.3 |

| 2 | 1 | 1.8 |

| 1.5 | 0.5 | 1.7 |

The cyan square generates a temperature-dependent power density (per unit area) given by

q^{\prime \prime}_\text{cyan}(x,y) = 0.2 \cdot T(x,y)

The yellow one does not generate any power so q^{\prime \prime}_\text{yellow} = 0. Boundary conditions are

\begin{cases} T(x,y) = y & \text{at the left edge $y=0$} \\ T(x,y) = 1-\cos\left(\frac{1}{2}\pi \cdot x\right) & \text{at the bottom edge $x=0$} \\ q'(x,y) = 2-y & \text{at the right edge $x=2$} \\ q'(x,y) = 1 & \text{at the top edge $y=1$} \\ \end{cases}

The input file illustrate how flexible FeenoX is and, again, how the problem definition in a format that the computer can understand resembles the humanly-written formulation of the original engineering problem:

PROBLEM thermal 2d # heat conduction in two dimensions

READ_MESH two-squares.msh

k_yellow(x,y) = 1/2+T(x,y) # thermal conductivity

FUNCTION k_cyan(x,y) INTERPOLATION shepard DATA {

1 0 1.0

1 1 1.5

2 0 1.3

2 1 1.8

1.5 0.5 1.7 }

q_cyan(x,y) = 1-0.2*T(x,y) # dissipated power density

q_yellow(x,y) = 0

BC left T=y # temperature (dirichlet) bc

BC bottom T=1-cos(pi/2*x)

BC right q=2-y # heat flux (neumann) bc

BC top q=1

SOLVE_PROBLEM

WRITE_MESH two-squares-results.msh T #CELLS kNote that FeenoX is flexible enough to…

- handle mixed meshes (the yellow square is meshed with triangles and the other one with quadrangles)

- use point-wise defined properties even though there is not

underlying structure nor topology for the points where the data is

defined (FeenoX could have read data from a

.mshor.vtkfile respecting the underlying topology) - understand that the problem is non-linear so as to use PETSc’s SNES framework automatically (the conductivity and power source depend on the temperature).

Figure 18: Temperature (main result) and conductivity for the two-squares thermal problem.. a — Temperature defined at nodes, b — Conductivity defined at cells

In the very same sense that variables x, y

and z appearing in the input refer to the spatial

coordinates x, y and z

respectively, the special variable t refers to the

time t. The requirement of allowing

time-dependent boundary conditions can be illustrated by solving the

NAFEMS T3 one-dimensional transient heat transfer benchmark. It consists

of a slab of 0.1 meters long subject to a fixed homogeneous temperature

on one side, i.e.

T(x=0)=0~\text{°C}

and to a transient temperature

T(x=0.1~\text{m},t)=100~\text{°C} \cdot \sin\left( \frac{\pi \cdot t}{40~\text{s}}\right)

at the other side. There is zero internal heat generation, at t=0 all temperature is equal to 0°C (sic) and conductivity, specific heat and density are constant and uniform. The problem asks for the temperature at location x=0.08~\text{m} at time t=32~\text{s}. The reference result is T(0.08~\text{m},32~\text{s})=36.60~\text{°C}.

PROBLEM thermal DIM 1 # NAFEMS-T3 benchmark: 1d transient heat conduction

READ_MESH slab-0.1m.msh

end_time = 32 # transient up to 32 seconds

T_0(x) = 0 # initial condition "all temperature is equal to 0°C"

# prescribed temperatures as boundary conditions

BC left T=0

BC right T=100*sin(pi*t/40)

# uniform and constant properties

k = 35.0 # conductivity [W/(m K)]

cp = 440.5 # heat capacity [J/(kg K)]

rho = 7200 # density [kg/m^3]

SOLVE_PROBLEM

# print detailed evolution into an ASCII file

PRINT FILE nafems-t3.dat %.3f t dt %.2f T(0.05) T(0.08) T(0.1)

# print the asked result into the standard output

IF done

PRINT "T(0.08m,32s) = " T(0.08) "ºC"

ENDIF$ gmsh -1 slab-0.1m.geo

[...]

Info : Done meshing 1D (Wall 0.000213023s, CPU 0.000836s)

Info : 61 nodes 62 elements

Info : Writing 'slab-0.1m.msh'...

Info : Done writing 'slab-0.1m.msh'

Info : Stopped on Sun Dec 12 19:41:18 2021 (From start: Wall 0.00293443s, CPU 0.02605s)

$ feenox nafems-t3.fee

T(0.08m,32s) = 36.5996 ºC

$ pyxplot nafems-t3.ppl

$Besides “everything is an expression,” FeenoX follows another cornerstone rule: simple problems ought to have simple inputs, akin to Unix’ rule of simplicity—that addresses the first half of Alan Kay’s quote above. This rule is further discussed in sec. 3.1.

2.6 Extensibility

It should be possible to add other problem types casted as PDEs (such as the Schröedinger equation) to the tool using a reasonable amount of time by one or more skilled programmers. The tool should also allow new models (such as non-linear stress-strain constitutive relationships) to be added as well.

When solving partial differential equations numerically, there are some steps that are independent of the type of PDE. For example,

- read the mesh

- evaluate the coefficients (i.e. material properties)

- solve the discretized systems of algebraic equations

- write the results

Even though FeenoX is written in C, it

makes extensive use of function

pointers to mimic C++’s virtual

methods. This way, depending on the problem type given with the PROBLEM

keyword, particular PDE-specific routines are called to

- initialize and set up solver options (steady-state/transient, linear/non-linear, regular/eigenproblem, etc.)

- parse boundary conditions given in the

BCkeyword - build elemental contributions for

- volumetric stiffness and/or mass matrices

- natural boundary conditions

- compute secondary fields (heat fluxes, strains and stresses, etc.) out of the gradients of the primary fields

- compute per-problem key performance indicators (min/max temperature, displacement, stress, etc.)

- write particular post-processing outputs

Indeed, each of the supported problems, namely

is a separate directory under src/pdes

that implements these “virtual” methods (recall that they are function

pointers) that are resolved at runtime when parsing the main input

file.

FeenoX was designed with separated common “mathematical” routines

from the particular “physical” ones in such a way that any of these

directories can be removed and the code would still compile. The

autogen.sh is in charge of

- parsing the source tree

- detect which are the available PDEs

- create appropriate snippets of code so the common mathematical framework can resolve the function pointers for the entry points

- build the

Makefile.amtemplates used by theconfigurescript

For example, if we removed the directory

src/pdes/thermal from a temporary clone of the main Git

repository then the whole bootstrapping, configuration and compilation

procedure would produce a feenox executable without the

ability to solve thermal problems:

~$ cd tmp/

~/tmp$ git clone https://github.com/seamplex/feenox

Cloning into 'feenox'...

remote: Enumerating objects: 6908, done.

remote: Counting objects: 100% (4399/4399), done.

remote: Compressing objects: 100% (3208/3208), done.

remote: Total 6908 (delta 3085), reused 2403 (delta 1126), pack-reused 2509

Receiving objects: 100% (6908/6908), 10.94 MiB | 6.14 MiB/s, done.

Resolving deltas: 100% (4904/4904), done.

~/tmp$ cd feenox

~/tmp/feenox$ rm -rf src/pdes/thermal/

~/tmp/feenox$ ./autogen.sh

creating Makefile.am... ok

creating src/Makefile.am... ok

calling autoreconf...

configure.ac:18: installing './compile'

configure.ac:15: installing './config.guess'

configure.ac:15: installing './config.sub'

configure.ac:17: installing './install-sh'

configure.ac:17: installing './missing'

parallel-tests: installing './test-driver'

src/Makefile.am: installing './depcomp'

done

~/tmp/feenox$ ./configure.sh

[...]

configure: creating ./config.status

config.status: creating Makefile

config.status: creating src/Makefile

config.status: creating doc/Makefile

config.status: executing depfiles commands

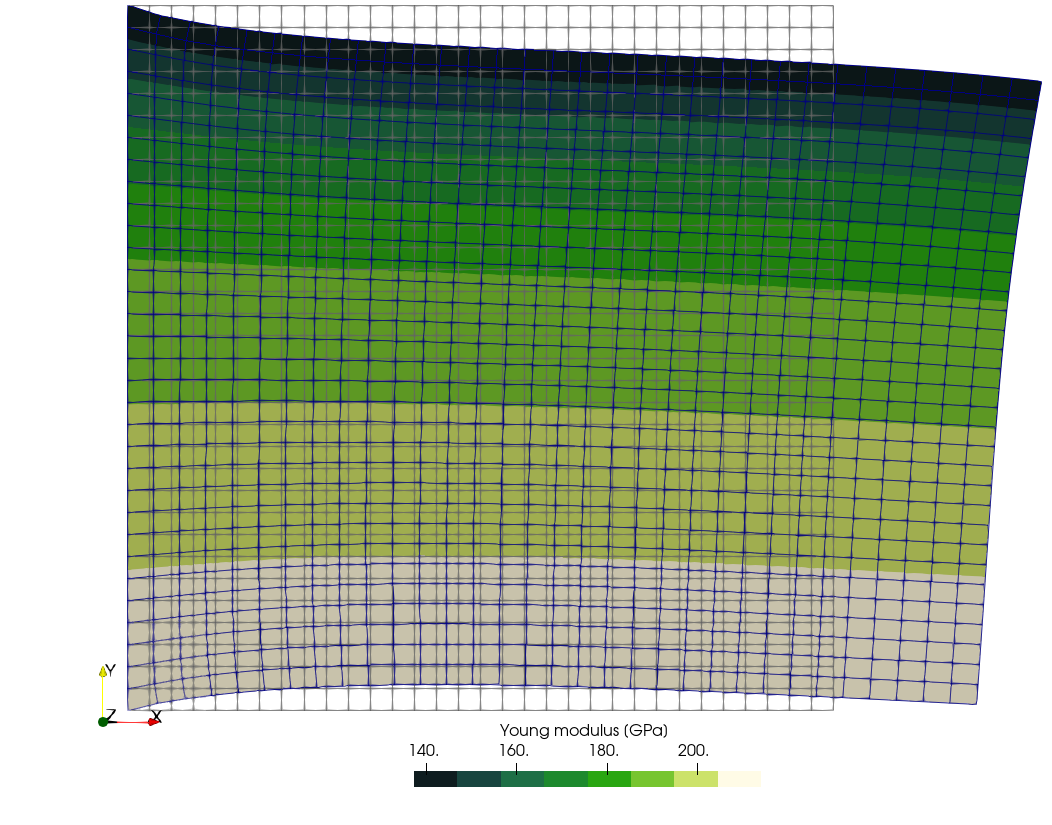

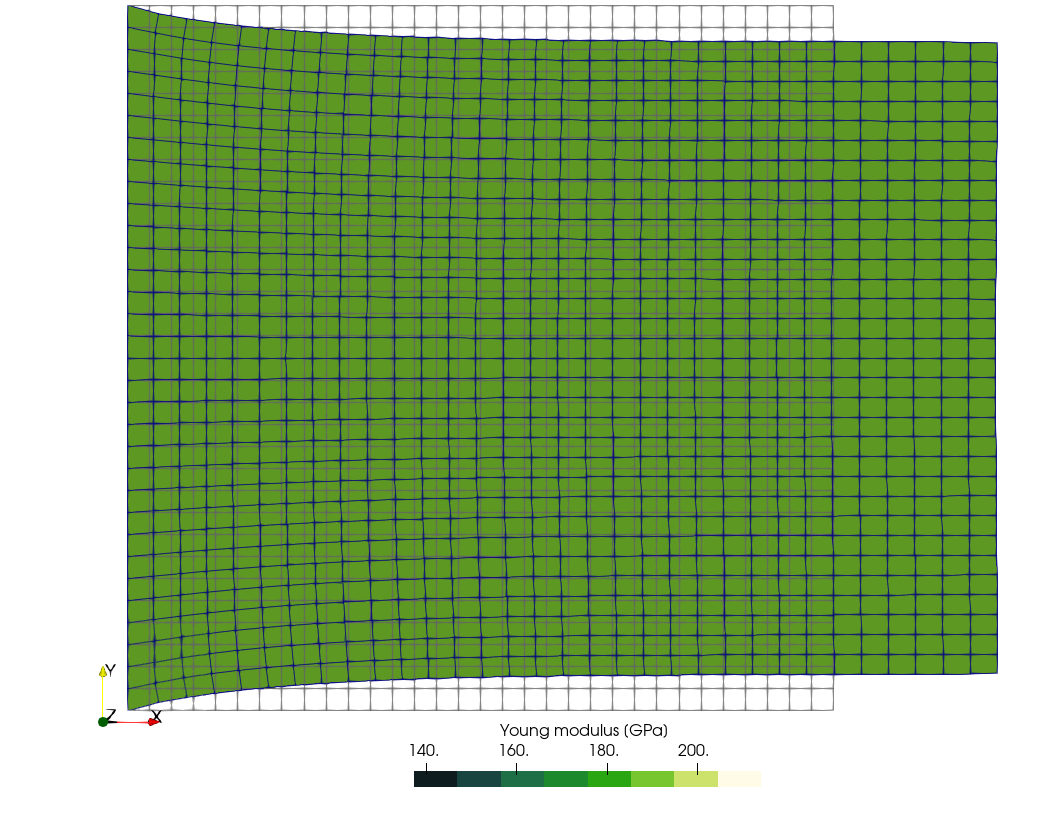

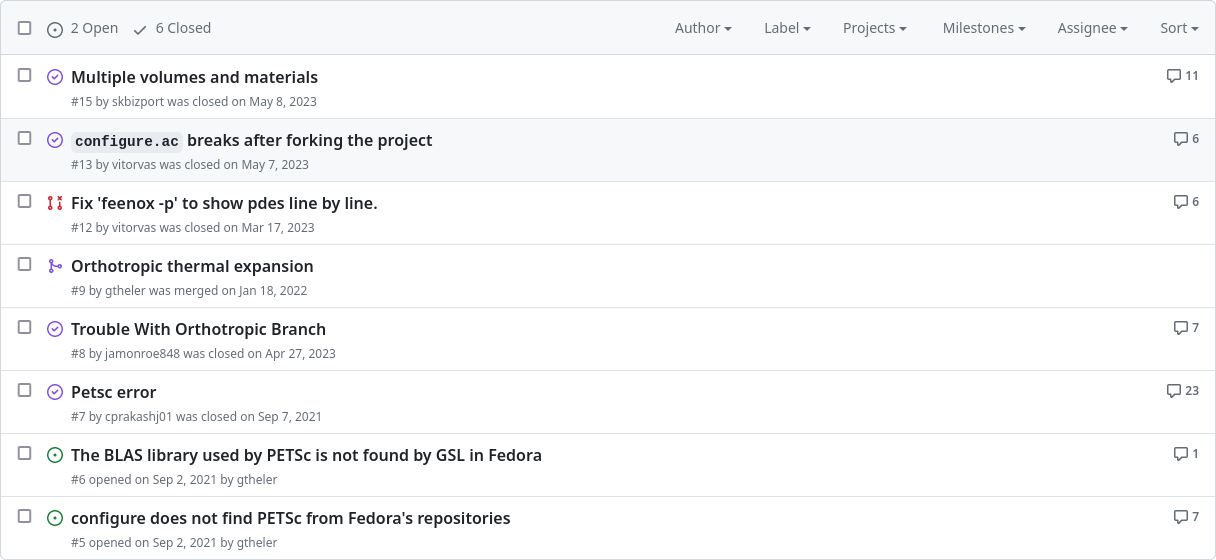

~/tmp/feenox$ make